Apple Vision Pro and subsidizing R&D

Apple's ability to leverage prior works

Tanay’s Newsletter is a weekly newsletter about the business of the technology industry.

Hi friends,

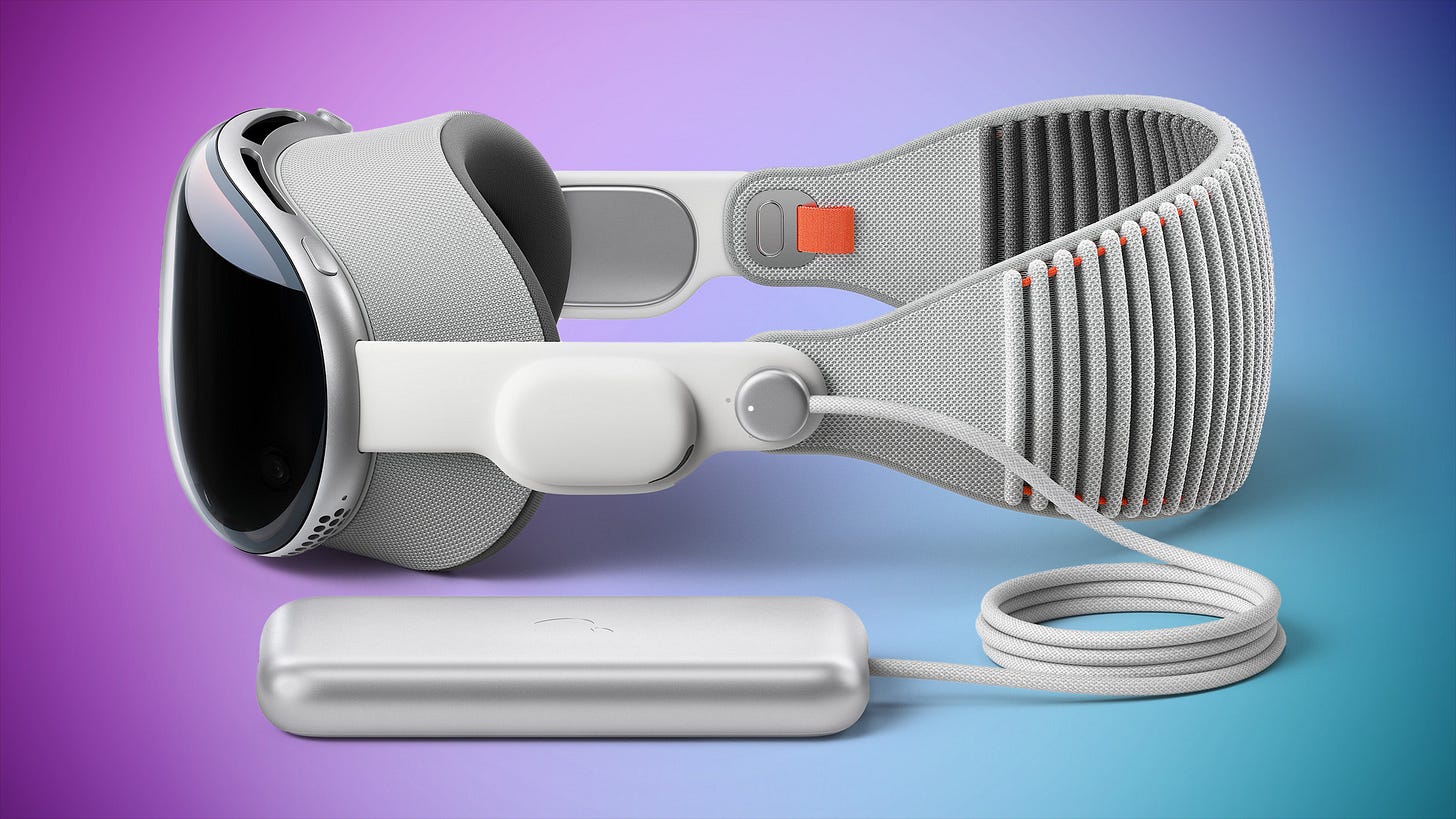

A few weeks ago, Apple announced the Vision Pro. A lot has been written about the device itself, but I want to focus on one aspect: how Apple “subsidized the R&D” of the Vision Pro, both through others’ work and its own. Let’s get to it!

R&D and its scale

Making big bets requires significant amounts of capital, and AR/VR devices undoubtedly fit within that category. By my estimation, Meta has already spent ~$45-50B on R&D to get to the Quest 3 and the platform and apps that surround it [1].

And this process has taken a considerable amount of time – Meta acquired Oculus for over $2B in 2014, meaning the Quest 3 and what we see today is the culmination of 9 years of R&D at Meta (plus more from the Oculus days).

Unfortunately, we don’t have a good sense of how many R&D dollars it took Apple to get to the Vision Pro, though Matthew Ball has some estimates. From a time perspective, reports suggest that the device has been in development since ~2018.

But maybe the more interesting part of the R&D behind Apple’s device is how, in some ways, it feels like a culmination of the progress Apple has made through all its prior work, which allowed it to “subsidize” the Vision Pro’s R&D.

Subsidizing Vision Pro’s R&D from prior work

The most striking aspect of watching the Vision Pro announcement for me was Apple's ability to reuse, build upon, or leverage existing components from their previous endeavors.

Let’s talk about some of the key ones:

1. M2 chip (→ MacBooks)

The Vision Pro has two chips: the M2 and the R1. The R1 is new and processes sensor data, but the M2 is a chip Apple had already developed and uses in its recent line of MacBooks.

The M2 chip in the Vision Pro is what runs visionOS.

2. Digital Crown (→ Apple Watch)

The Vision Pro headset has a Digital Crown that can be used to control how much of the user’s field of view the virtual background occupies.

Wearers of the Apple Watch will undoubtedly be familiar with this component, used as the primary hardware input on the Apple Watch.

3. Spatial audio (→ AirPods Pro)

The Vision Pro is billed as a spatial computing device, so it naturally supports spatial audio, which was first introduced in the AirPods Pro. Spatial audio provides a more immersive sound experience by creating the illusion that sound is coming from different directions.

4. Siri (→ iOS)

Siri was first integrated into the iPhone 4S in 2011 and has since been integrated across Apple’s device lineup. The Vision Pro is no exception. In fact, given the interface, Siri, and speech recognition and voice-based control more generally, may become one of the primary ways of interacting with the Vision Pro.

5. Apps and Services (→ iOS)

While the Vision Pro features a new OS called visionOS, it was largely developed on top of iOS and allows several existing Apple digital products (Music, TV+, games from Apple Arcade, FaceTime) to work seamlessly on the device.

In addition, hundreds of thousands of popular iOS apps will be supported on the device from the get-go, including productivity apps such as Microsoft’s suite and Zoom.

And given Apple’s existing developer base, you can bet that creating apps for visionOS will be accessible to a wider set of developers than creating apps for, say, the Quest.

6. Others

There are other, smaller similarities: the Vision Pro strap is reminiscent of the AirPods Max, Optic ID likely leverages some of the Face ID work, and eye tracking likely leverages (or perhaps feeds into) some of the accessibility work on the iPad.

Overall, it almost felt like Apple’s natural product evolution has been leading to the Vision Pro.

Subsidizing R&D by learning from peers

The other aspect of the launch was its timing and what Apple was able to learn from others’ work ahead of it. Depending on the definition one uses (and some will disagree), the iPhone wasn’t the first smartphone, but it changed the category and the industry, and many regard it as the first true smartphone as we know it today.

Similarly, the Vision Pro is not the first AR/VR device (the Quest has already sold over 10M units), even though it is potentially a game-changer in the category.

So in some ways, Apple has also been able to subsidize its R&D by learning from Meta and others. Rather than building, shipping, and iterating itself, Apple has been able to watch the market’s reaction to the Quest and Meta’s iterations on it, and decide what it likes there vs. what it doesn’t.

While people may argue that the Vision Pro was likely designed with a completely independent vision, it’s also likely that Apple learned from what worked (or didn’t work) with the Quest, and in some sense was able to get to a better first version than it would have without those learnings.

A few decisions that may have been influenced by Meta’s devices include:

- The use of gestures and hand tracking instead of controllers (Quest moved from controllers only to supporting both over time)
- The use of a controllable mixed reality mode (Quest also supports a pass-through mode, but one that isn’t as good as Vision Pro’s appears to be)
- Decisions around screen quality and similar trade-offs, given that VR has not “taken off” yet (it’s interesting that the device is called the “Pro”, which hints at a lower-priced version over time)

Ultimately, the differences may be wholly informed by the difference in philosophies between the companies as Zuckerberg alludes to below. But it’s also possible Vision Pro’s strategy was in part informed by seeing what was and wasn’t working for the Quest.

To conclude, here’s a tweet that encapsulates the Vision Pro situation well.

Thanks for reading and to Wiley for his tweet that inspired this post!


[1] The real number may be somewhat lower, since my estimate includes spending on other devices such as Portal and Ray-Ban Stories.


You touched on this at the end of #5 and it can't be stressed enough: the Apple developer frameworks are a huge advantage. SwiftUI (iOS 13+), ARKit (iOS 11+), and RealityKit (iOS 13+) have been available to developers for years (iOS 11 launched in 2017). There have been few real use cases for ARKit and RealityKit so far on iPhone/iPad, but Apple's continued investment over the years, the developer familiarity and feedback, and the use of these frameworks on hundreds of millions of devices may give Apple a considerable leg up.