AI’s Higher Acceptance Bar and How to Clear It

On the acceptance criteria for AI and what builders can do about it

This is a weekly newsletter about the business of the technology industry. To receive Tanay’s Newsletter in your inbox, subscribe for free.

Hi friends,

About 40,000 Americans died in traffic crashes last year. According to Waymo’s latest safety data¹, a fully driverless fleet could prevent about 80–85% of injury-causing collisions if scaled nationwide. If a comparable reduction carried over to fatal crashes, that would mean more than 30,000 lives saved each year. Yet each minor robotaxi mishap dominates the news cycle. We accept roughly 110 human deaths every day on our roads, yet recoil at even a handful of autonomous vehicle accidents.

That gap is the AI acceptance bar. It appears whenever machines start making decisions that used to belong to humans. Builders can’t wish it away, but they can design for it. Below is a playbook for doing exactly that.

Why the Bar Is Higher for Machines

Black-Box Anxiety – Humans can always ask each other, “What were you thinking?” Neural networks, however, feel opaque, which makes their errors seem mysterious and unfixable. Even when outcomes are statistically better overall, mistakes that happen outside our direct control disturb us deeply. This perceived loss of agency amplifies the emotional weight of errors, regardless of their actual impact.

Headline Asymmetry – Human errors are mundane; machine errors feel novel. One spectacular AI failure grabs more headlines than a thousand silent successes.

Expectation of Perfection – Because we built these systems, we expect them to be flawless. A 2% error rate that seems “good enough” for humans feels “unacceptable” for software.

Understanding these instincts should shape the product and rollout choices that follow.

Clearing the Bar: Five Tactics for Builders

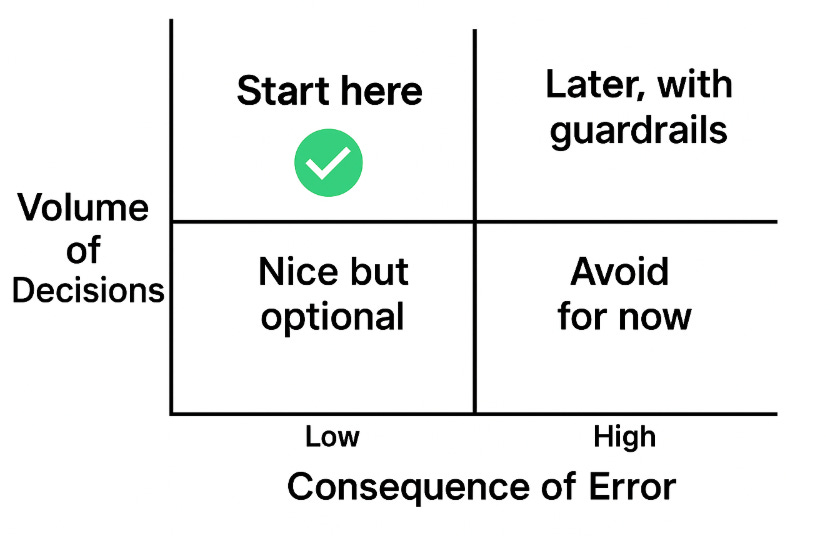

1. Start Where Errors Are Cheap

Begin with high-volume tasks in your domain where any single error is cheap. Millions of safe repetitions deliver clear ROI, and the occasional error remains a minor inconvenience. Each small win builds trust that will carry you into higher-stakes territory.

2. Turn the Black Box Into a Glass Box

Black‑box anxiety melts when users can peek inside:

Surface confidence (“84% certain”), highlight the inputs that most influenced the decision, and link to a plain‑English explanation.

Auto‑escalate the edge cases. Anything below a confidence threshold routes to a human reviewer.

Keep a one‑click rollback. Knowing there’s an “undo” lowers the psychological cost of trying something new.
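A minimal Python sketch of the three glass-box ideas above: formatting confidence for display, ranking the most influential inputs, routing low-confidence predictions to a human, and keeping an undo history. The threshold value, the `Prediction` shape, and the per-input attribution scores (which would come from whatever explainability method you use) are hypothetical assumptions, not part of any specific product.

```python
from dataclasses import dataclass

# Hypothetical threshold: predictions below this confidence go to a human.
CONFIDENCE_THRESHOLD = 0.80

# Sentinel meaning "no previous value existed" (distinct from a stored None).
_MISSING = object()


@dataclass
class Prediction:
    label: str
    confidence: float               # model probability for `label`, in [0, 1]
    attributions: dict[str, float]  # hypothetical per-input influence scores


def top_inputs(pred: Prediction, k: int = 3) -> list[str]:
    """Return the k inputs with the largest absolute influence on the prediction."""
    ranked = sorted(pred.attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return [name for name, _ in ranked[:k]]


def route(pred: Prediction, threshold: float = CONFIDENCE_THRESHOLD) -> dict:
    """Build what the user sees and decide whether a human must review it."""
    return {
        "label": pred.label,
        "confidence_display": f"{pred.confidence:.0%} certain",
        "top_inputs": top_inputs(pred),
        "action": "auto_apply" if pred.confidence >= threshold else "human_review",
    }


class UndoableStore:
    """Records each change's previous value so the latest change can be undone."""

    def __init__(self) -> None:
        self.state: dict[str, object] = {}
        self._history: list[tuple[str, object]] = []

    def apply(self, key: str, value: object) -> None:
        # Remember what was there before (or that nothing was) prior to overwriting.
        self._history.append((key, self.state.get(key, _MISSING)))
        self.state[key] = value

    def rollback(self) -> None:
        """One-click undo of the most recent apply(); no-op if nothing to undo."""
        if not self._history:
            return
        key, previous = self._history.pop()
        if previous is _MISSING:
            del self.state[key]
        else:
            self.state[key] = previous


pred = Prediction("approve", 0.84, {"income": 0.5, "debt_ratio": -0.3, "tenure": 0.1, "zip": 0.05})
print(route(pred))  # "84% certain", top inputs income/debt_ratio/tenure, action "auto_apply"
```

Keeping the threshold a parameter lets each customer or workflow decide how conservative escalation should be.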

3. Communicate Error Rates & Contingency Plans

Avoid vague claims about your model’s accuracy. Instead, benchmark it directly against human performance and share precise results (e.g., “three critical mistakes per two million predictions, versus 24 by humans on the same volume, an 8× improvement”). Pair this with a clear contingency plan: spell out the exact error thresholds that trigger automatic throttling or immediate human review, and publish real-time quality metrics, much like a status page.

When buyers see both superior performance and robust safety controls, they perceive your AI not just as smarter but as operationally safer.
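To make the comparison concrete, here is a minimal Python sketch of the arithmetic behind the example figures, plus a mapping from an observed error rate to pre-announced contingency actions. It assumes the human count of 24 was measured on the same two million predictions; the function names and the threshold values (`THROTTLE_AT`, `HUMAN_REVIEW_AT`) are hypothetical placeholders, not figures from any real deployment.

```python
# Hypothetical contingency thresholds, in errors per million predictions.
# Real values would come from your own human baseline; these are placeholders.
THROTTLE_AT = 5.0
HUMAN_REVIEW_AT = 10.0


def errors_per_million(errors: int, predictions: int) -> float:
    """Normalize an error count to a rate per million predictions."""
    return errors * 1_000_000 / predictions


def improvement_factor(model_errors: int, human_errors: int) -> float:
    """How many times fewer errors the model made on the same workload."""
    return human_errors / model_errors


def contingency_action(rate_per_million: float) -> str:
    """Map an observed error rate to the response promised in the contingency plan."""
    if rate_per_million >= HUMAN_REVIEW_AT:
        return "route_all_to_human_review"
    if rate_per_million >= THROTTLE_AT:
        return "throttle"
    return "normal"


# Example figures: 3 model errors vs 24 human errors on 2 million predictions.
model_rate = errors_per_million(3, 2_000_000)    # 1.5 per million
human_rate = errors_per_million(24, 2_000_000)   # 12.0 per million
print(f"{improvement_factor(3, 24):.0f}x fewer errors")  # prints "8x fewer errors"
print(contingency_action(model_rate))                    # prints "normal"
```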

4. Climb the Risk Ladder, Rung by Rung

Adopt a gradual, crawl-walk-run progression to build customer buy-in:

Shadow mode: AI observes and suggests; humans execute. Success metric: Parity with human judgment.

Human-in-the-loop: AI drafts; humans approve. Success metric: Fewer than X% edits.

Guard-railed autonomy: AI independently handles safe scenarios, escalating complex cases. Success metric: Timely escalation and meeting business KPIs.

Full autonomy: Humans handle only anomalies.

Gate each step with hard thresholds and share the criteria with customers. Each step produces real‑world evidence that earns permission for the next. Different workflows may also start at, and remain at, different steps depending on how much is at stake.
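The gating rule can be sketched in Python as a small state machine: a workflow moves up one rung only when every metric for its current rung clears its bar, and never past a per-workflow ceiling. The metric names and threshold values below are hypothetical placeholders; in particular, the 5% edit rate merely stands in for the unspecified “X%”.

```python
import operator
from enum import IntEnum


class Stage(IntEnum):
    SHADOW = 0
    HUMAN_IN_THE_LOOP = 1
    GUARDRAILED = 2
    FULL_AUTONOMY = 3


# Hypothetical gates: (metric, comparison, threshold) that must ALL hold at the
# current stage before promotion. Values are illustrative, not recommendations.
GATES = {
    Stage.SHADOW: [("agreement_with_humans", operator.ge, 0.98)],  # parity with human judgment
    Stage.HUMAN_IN_THE_LOOP: [("edit_rate", operator.lt, 0.05)],   # "fewer than X% edits"
    Stage.GUARDRAILED: [
        ("missed_escalation_rate", operator.le, 0.01),             # timely escalation
        ("kpi_attainment", operator.ge, 1.0),                      # business KPIs met
    ],
}


def gate_passed(current: Stage, metrics: dict[str, float]) -> bool:
    """True only if every gate metric is present and meets its threshold."""
    for name, compare, threshold in GATES.get(current, []):
        if name not in metrics or not compare(metrics[name], threshold):
            return False
    return True


def next_stage(current: Stage, metrics: dict[str, float],
               ceiling: Stage = Stage.FULL_AUTONOMY) -> Stage:
    """Promote by at most one rung, and never beyond this workflow's ceiling."""
    if current >= ceiling:
        return current
    return Stage(current + 1) if gate_passed(current, metrics) else current
```

The `ceiling` argument captures the point that some high-stakes workflows may deliberately stay at a lower rung even when their metrics look good.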

5. Productize Risk Management

With AI products, risk management is a critical product feature, not merely legal fine print.

Beyond transparency (as outlined in tactic #2), post-error accountability is essential to building organizational trust. Key features include:

Audit Trail API: Immutable logs tracking every AI decision with explanations.

Automatic Kill Switch: Clearly defined error budgets trigger automatic throttling or system pauses when exceeded.
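A minimal Python sketch of both features, under stated assumptions. `AuditTrail` makes the log tamper-evident by chaining SHA-256 hashes: each entry stores the hash of the one before it, so editing any stored entry is caught by `verify()`. Hash chaining detects tampering but does not prevent it; true immutability would also need write-once storage. `KillSwitch` pauses automation once errors within a sliding window of recent decisions exceed an error budget. The class names, window size, and budget are hypothetical, and logged inputs are assumed to be JSON-serializable.

```python
import hashlib
import json
import time
from collections import deque

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of an entry (without its own hash)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only, hash-chained decision log (tamper-evident, not tamper-proof)."""

    def __init__(self) -> None:
        self._entries: list[dict] = []

    def record(self, decision: str, explanation: str, inputs: dict) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS_HASH
        body = {
            "timestamp": time.time(),
            "decision": decision,
            "explanation": explanation,
            "inputs": inputs,
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": _digest(body)}
        self._entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every hash; False if any entry was altered or reordered."""
        prev_hash = GENESIS_HASH
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


class KillSwitch:
    """Pauses automation when errors in the last `window` decisions exceed the budget."""

    def __init__(self, window: int = 1000, error_budget: int = 5) -> None:
        self._recent = deque(maxlen=window)  # True marks a decision that was an error
        self.error_budget = error_budget
        self.paused = False

    def observe(self, was_error: bool) -> bool:
        """Record one outcome; return True if automation may keep running."""
        self._recent.append(was_error)
        if sum(self._recent) > self.error_budget:
            self.paused = True  # stays paused until a human calls reset()
        return not self.paused

    def reset(self) -> None:
        """Human-initiated resume after the cause has been investigated."""
        self._recent.clear()
        self.paused = False


trail = AuditTrail()
trail.record("approve", "income above policy minimum", {"income": 85000})
print(trail.verify())  # True
```

Making the pause sticky until `reset()` is a deliberate choice in this sketch: once the budget is spent, resuming requires a human decision rather than waiting for old errors to age out of the window.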

Closing Thoughts

The heightened bar for AI acceptance, though challenging, gives enterprises that understand it deeply a clear opportunity to differentiate and gain a competitive advantage. Teams that proactively address concerns by starting small, prioritizing transparency, rigorously demonstrating performance, and methodically managing risk will outpace those waiting for public perception to shift.