Unpacking Apple Intelligence

A few takeaways from the Apple Intelligence announcement

This is a weekly newsletter about the business of the technology industry. To receive Tanay’s Newsletter in your inbox, subscribe here for free:

Hi friends,

Apple made waves at WWDC 2024 with the introduction of Apple Intelligence, which can be described as an OS-level agent platform that could accelerate the adoption of agents. The core of Apple Intelligence is its ability to combine powerful generative models with the user's personal context, all while maintaining Apple's stringent privacy standards. This week, I’ll unpack the announcement and discuss a few key takeaways.

The Announcement

At its heart, Apple Intelligence marks a significant upgrade to Siri, transforming it into a more contextually aware and capable assistant. Because the integration with ChatGPT was talked up in the demo, some mistakenly concluded that all Apple announced was a “ChatGPT” integration at the OS level.

Instead, “Apple Intelligence” is a bit more sophisticated than that and comprises a few key elements:

Models: A suite of Apple’s on-device models that are fine-tuned for use cases such as writing text, generating images, prioritizing notifications, and taking actions across applications, plus a suite of larger models on Apple’s servers that are tuned for the same use cases.

Orchestrator: The ability to determine, depending on the user’s request, whether the on-device models, Apple’s server models, or ChatGPT should handle it (asking the user for permission in the ChatGPT case).

Semantic Index: Essentially a database that indexes the semantic meaning of all the files, photos, emails, etc. on a user’s device, from which the system can retrieve relevant items as needed when handling a query for the user.

App Intents Toolbox: A database of all the actions and functions across the apps on the user’s device that Siri can invoke to fulfill a query. Examples may include sending an email through the Mail app, editing a photo through the Photos app, or sending a calendar invite.

At a high level, the system can be thought of as having the ability to perform Retrieval Augmented Generation across all the data that the user has on their device, route the request to the appropriate model, and then take actions in the appropriate application to perform tasks for the user.
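To make that flow a bit more concrete, here is a minimal toy sketch in Python of the retrieve, route, and act loop. It is not Apple's implementation or API: every name in it (`Item`, `retrieve`, `route`, `INTENTS`, `handle`) is hypothetical, the "semantic index" is just word overlap, and the routing flags are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Item:
    source: str  # hypothetical: which app the item came from
    text: str    # hypothetical: the indexed content


def retrieve(index: List[Item], query: str, k: int = 2) -> List[Item]:
    """Toy stand-in for a semantic index: rank items by shared words."""
    words = set(query.lower().split())
    scored = [(len(words & set(item.text.lower().split())), item) for item in index]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in scored[:k]]


def route(needs_world_knowledge: bool, is_complex: bool, user_allows_chatgpt: bool) -> str:
    """Toy orchestrator; the real routing criteria are not public."""
    if needs_world_knowledge:
        # ChatGPT is only used with the user's permission.
        return "chatgpt" if user_allows_chatgpt else "declined"
    return "server" if is_complex else "on_device"


# Toy "app intents toolbox": action name -> function that performs it.
INTENTS: Dict[str, Callable[[str], str]] = {
    "create_event": lambda detail: f"Calendar: created event '{detail}'",
    "send_email": lambda detail: f"Mail: drafted email '{detail}'",
}


def handle(query: str, index: List[Item], intent: Optional[str] = None, *,
           needs_world_knowledge: bool = False, is_complex: bool = False,
           user_allows_chatgpt: bool = False) -> dict:
    context = retrieve(index, query)                                   # 1. retrieve
    model = route(needs_world_knowledge, is_complex, user_allows_chatgpt)  # 2. route
    action = INTENTS[intent](context[0].text) if intent and context else None  # 3. act
    return {"model": model, "context": [c.source for c in context], "action": action}


index = [
    Item("Mail", "Mom flight UA 100 lands 6pm Friday"),
    Item("Messages", "Dinner with Sam at 8pm"),
    Item("Photos", "Beach trip album"),
]
result = handle("when is mom flight landing", index, "create_event")
print(result)
```

In this toy run the query only overlaps with the email, so the request stays on-device and the calendar action is built from that one retrieved item.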

A Few Takeaways

With the detail on that announcement, let’s discuss a few takeaways:

Blazing Fast On-Device Models

A standout feature of Apple Intelligence is its emphasis on on-device processing. By running AI models directly on devices like the iPhone 15 Pro, Apple ensures that tasks are performed with minimal latency. Apple's on-device stack includes a proprietary language model of roughly 3B parameters alongside a diffusion model for image generation.

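On iPhone 15 Pro, Apple reports a time-to-first-token latency of about 0.6 milliseconds per prompt token and a generation rate of about 30 tokens per second for the on-device language model. For the subset of queries handled on-device, this means users get fast responses without their data ever leaving the device.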
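To get a feel for what this latency means in practice, here is a rough back-of-the-envelope sketch. It treats the 0.6 ms figure as time to first token per prompt token and uses a generation rate of 30 tokens per second, both as reported by Apple for iPhone 15 Pro. The function name and the example token counts are made up for illustration.

```python
def estimate_latency_seconds(prompt_tokens: int, output_tokens: int,
                             ms_per_prompt_token: float = 0.6,
                             tokens_per_second: float = 30.0):
    """Return (time to first token, generation time), both in seconds."""
    time_to_first_token = prompt_tokens * ms_per_prompt_token / 1000.0
    generation_time = output_tokens / tokens_per_second
    return time_to_first_token, generation_time


# Hypothetical request: a 1,000-token prompt summarized into 60 tokens.
ttft, gen = estimate_latency_seconds(prompt_tokens=1000, output_tokens=60)
print(f"time to first token: {ttft:.2f}s, generation: {gen:.2f}s")
```

Under these assumptions, the wait before the first token is well under a second even for a long prompt, and most of the total time goes to generating the reply.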

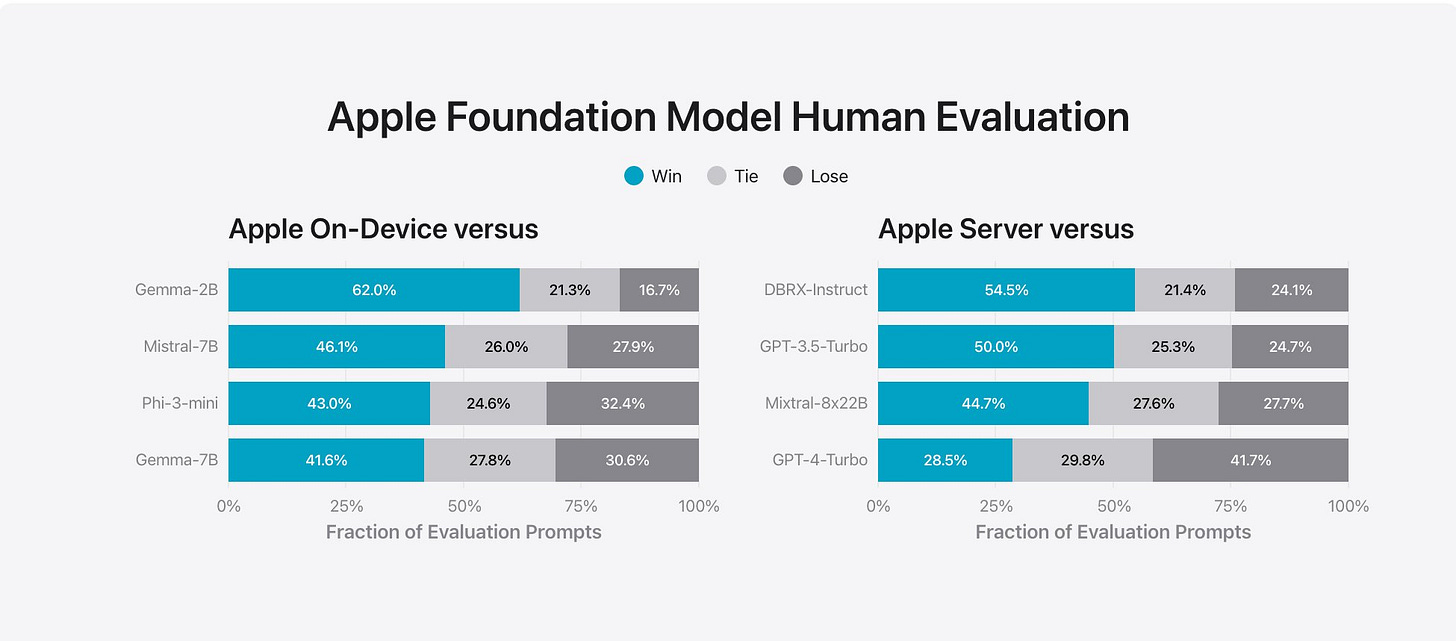

While the model is limited in size and doesn’t, say, have access to broad world knowledge, it stacks up pretty well on the set of tasks it’s focused on: text generation, summarization, and app commands. The human evaluation is below.

Apple’s launch should push more vendors to try running smaller models on-device, which offers privacy, latency, and cost benefits.

Private Cloud Compute and Apple’s Privacy Focus

For queries that need larger models than the on-device ones, Apple has server models that run in Apple’s Private Cloud Compute.

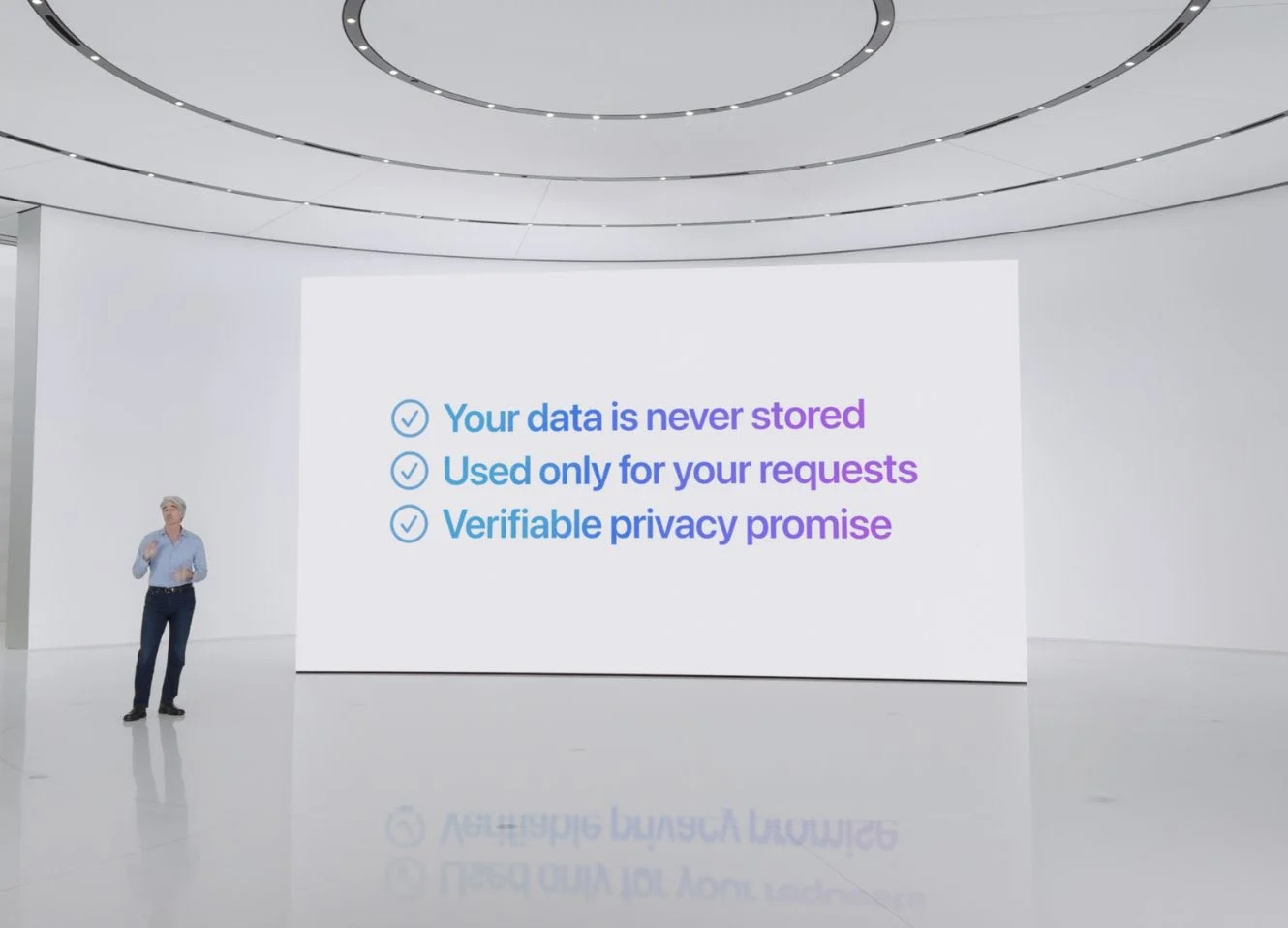

Apple's Private Cloud Compute (PCC) represents a cloud intelligence system for private AI processing. PCC allows for complex AI tasks to be executed in the cloud without storing user data there. This means personal information remains secure and inaccessible, even to Apple. The PCC system extends the privacy and security that Apple devices are known for into the cloud, ensuring that user data is used solely for executing requests and is not retained.

While only Apple’s server models support this private approach today, it will be interesting to see whether Apple partners with other model providers over time to make their models available in this manner.

The ChatGPT Partnership for Free

In addition to its on-device and server models, which are largely focused on answering questions related to personal context, Apple also has an integration with OpenAI’s ChatGPT. Based on a Tim Cook interview with Marques Brownlee, it seems Siri will call on ChatGPT for questions relating to world knowledge.

ChatGPT will be made available for free for those queries to the user, even if they aren’t a subscriber (which perhaps isn’t too surprising now that OpenAI’s GPT-4o model is available for free anyway). More notably, Apple is not paying OpenAI for this integration.

Instead, Apple is “paying OpenAI” through distribution to more than 1B Apple users. From Bloomberg:

Apple isn't paying OpenAI as part of the partnership, said the people, who asked not to be identified because the deal terms are private. Instead, Apple believes pushing OpenAI's brand and technology to hundreds of millions of its devices is of equal or greater value than monetary payments, these people said.

And from a data perspective, it seems OpenAI will not be able to train on the data, although it obviously still receives the prompt, since for now its models are not running on Apple’s Private Cloud Compute discussed above.

Still, if it does drive ChatGPT Plus subscriptions for OpenAI, the deal could be a huge success.

Acceleration of AI Agents?

Apple Intelligence could signify a major step towards AI agents given how deeply integrated it is into the OS. Out of the box, it should work well both to retrieve context across all of Apple’s products (contacts, emails, photos, etc.) and to take actions in Apple’s applications (calendar, email, photos, etc.).

Some of the demos Apple showcased were relatively simple and in essence were:

“When is my mom’s flight landing?”

“What’s my plan for dinner?”

“What’s the weather at X? Can you create an event for that.”

But as more third-party app developers open up the information in their apps to the semantic index, and also expose actions in their applications to Siri by integrating App Intents (which have existed for a few years now), it could become a powerful personal assistant that simplifies and enhances everyday interactions.

It’ll be interesting to see whether it catches on with developers and with end users, and whether it will be enough to breathe new life into Siri.

Closing Thoughts

Overall, Apple Intelligence seems like a well-thought-out initial foray into AI by Apple that prioritizes privacy and ease of use for end users. It’s a good mix of RAG across all user data, a suite of models ranging from on-device all the way to GPT-4o, and the ability to take actions across apps.

It will be interesting to see whether it results in true agentic use cases or remains limited to relatively simple tasks and queries, as Siri largely is today.

Since it is available only on the iPhone 15 Pro and later devices, it also seems like Apple’s bet to shorten the iPhone replacement cycle, which has been lengthening. Stock markets certainly seem to believe it may.