The State of Generative AI Part 2

Where we are and where we are going in audio and video

This is a weekly newsletter about the business of the technology industry. To receive Tanay’s Newsletter in your inbox, subscribe here for free:

Hi friends,

Last week, I wrote about the rapid development of Generative AI, broke it down by the medium generated, and discussed the first three of the six media below. Today, I’ll discuss the remaining three.

Text

Code

Image

Audio

Video

Multi-modal

I recommend reading that piece before this one for a discussion on text, code, and images.

Audio

When it comes to using Generative AI for audio, the obvious thing to discuss is audio or voice synthesis.

First, let’s look at the current state of audio models.

Broadly, I split audio into two categories: music and non-music (voice).

On the voice side, Microsoft recently announced VALL-E, a model that can take a 3-second recording of someone’s voice and synthesize speech in that voice for use in any application. Given any text prompt, it can output that text as audio in the person’s voice, with the ability to control emotion.

On the music side, several companies are working on models that generate music. Examples include Riffusion, which uses Stable Diffusion to create “images” of spectrograms that are then converted to audio, and Dance Diffusion (by Harmonai), which can generate music and be fine-tuned on specific albums.

Use Cases

So what are the use cases for audio synthesis?

I. Media and Advertising

Being able to generate specific voices is a big unlock for media. In video games, movies, and television, audio synthesis can be used to dub content into other languages in an actor’s own voice, to create new characters with specific voices in video games, or simply to speed up filming and editing in movies and TV.

Examples of companies in this space include:

Resemble.ai offers voice cloning models and is used for audio generation across movie and TV use cases, among others. Netflix has used it in documentaries.

WellSaid Labs creates AI voices that can be used in audio/video ads or other media use cases.

Papercup focuses on generating synthetic voices to dub TV shows and movies into other languages.

II. Call Centers

Call centers rely on a set of high-quality voices with low enough latency for real-time use. Historically, they had only a few voices to choose from, supplied by big vendors like Google and Microsoft.

With audio generation, one can imagine voices and accents local to a customer’s region being generated to respond to their support requests.

Rime is one of the companies working in this space, though some of the companies mentioned above also support the real-time requirements of these use cases.

III. Narration and Accessibility

AI-generated voices can also be used for narration in audiobooks, in hardware devices such as smart speakers, in text-to-speech accessibility options in web browsers, and in similar applications, including education.

For these, most companies that generate voices and offer a text-to-speech API can serve as a solution.

IV. Music Generation

Generating music, or assisting in its creation, is another prevalent use case. Aside from the music models that can be used directly, companies are building applications in this space, including:

Boomy allows users to generate music and upload it to streaming platforms to earn revenue. Boomy users have already generated more than 10M songs.

Soundraw allows users to choose the mood, genre, length, and other attributes and generates music for them.

Moises assists musicians in their creative process by leveraging AI to let them separate vocals, modify beats, change pitch, and more.

V. Audio Transcription

Audio transcription isn’t technically “Generative AI,” but in many cases, getting value from audio requires first converting it to text, and large AI models are very useful for that.

Whisper by OpenAI is an open-source model that can be used for transcription and speech recognition.

Deepgram is one of the leaders in using AI for transcription, speech recognition, and speech understanding, both in real time and offline.

Video

In some ways, video is the ultimate boss of Generative AI, since doing it properly in its end state will likely require generating images, audio, and, to some extent, text.

Today, there are no publicly available models that can do general video generation the way existing models can for images or text. But progress is being made, with a number of companies working on this:

Make-A-Video by Meta, while not publicly available, demonstrates the ability to create simple videos from a text prompt or an image, as shown below:

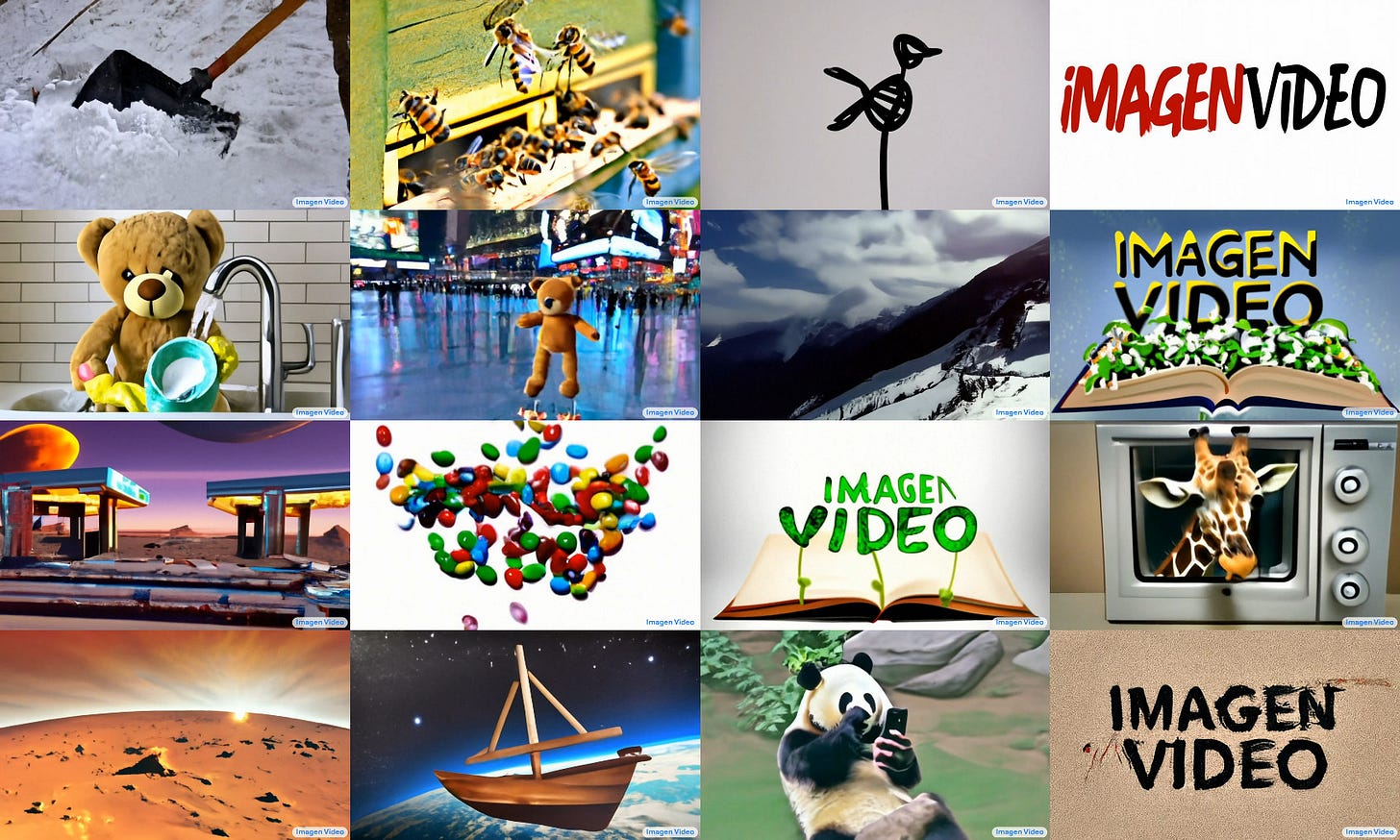

Imagen Video by Google is another model that can create 24fps video from a text prompt; it is also not publicly available.

Runway, which builds an AI-based video editing product, has teased a text-to-video model, though it hasn’t been released yet.

OpenAI has confirmed that it is working on a video generation model but hasn’t given a definitive timeline.

“It will come. I wouldn’t want to make a confident prediction about when. We’ll try to do it, other people will try to do it ... It’s a legitimate research project. It could be pretty soon; it could take a while.” - Sam Altman, CEO of OpenAI

Use Cases

While fully general video generation isn’t available yet, several use cases are already solvable today, and that set continues to expand.

I. Sales, Training, and Support

One kind of video that can easily be generated with AI today is a human avatar speaking a specific script. A number of companies are working to make these avatar videos simple to generate for use cases such as sales (personalized outreach at scale), training, customer support, and more.

Some examples of companies include:

Synthesia is an AI-based avatar video creation platform used across sales and training use cases.

Rephrase is another AI-based avatar video creation platform, used to personalize video messaging in marketing and sales use cases.

D-ID is another such platform, used more for training, learning, and corporate communications.

II. Marketing

Video is increasingly the default ad format on channels such as Facebook, Instagram, and TikTok. However, video ads are difficult and expensive to create today, especially for SMBs. As generative video evolves, companies will aim to take an image of a business’s product or service and automatically generate a 10-30 second video ad from it, likely with more than just a talking avatar.

While I’ve not yet come across a product that can fully do this (other than the avatar ones), one can see how Meta’s Make-A-Video or Google’s Imagen Video might be used to convert an image into a short video.

Similarly, companies like Omneky are working to generate ad assets (initially images) and to understand what drives some of them to perform better than others, insights that can then be used to craft the perfect video assets.

III. Insights Extraction

A lot of knowledge and insight is contained in video, including meetings and other conversations, which can be harder to parse, search, or scan than text. Fortunately, some companies are working directly on making use of that knowledge. While not quite “Generative Video,” these companies still use LLMs to summarize, synthesize, and extract insights from videos.

Examples include:

Gong pulls data from video calls (among other sources) and extracts insights to help sales reps perform better.

Fathom helps summarize video meetings and identify to-dos and action items.

In general, we’re likely to see many more ways of making videos indexed, searchable, and useful.

IV. Consumer Social

While we’re not quite there yet, it’s not hard to imagine a world where many of the videos we see on TikTok and Instagram Reels are essentially generated by AI. In particular, videos that follow a specific formula and are more constrained in what they show (e.g., the avatar use cases above) will be the easiest to pick off first.

As one data point, the #deepfake hashtag has 1.3B views, with multiple videos going viral daily, such as one featuring Harry Styles.

So far, I’ve not come across a product that can easily generate entire videos beyond simple talking-avatar videos, but AI tools have made it significantly easier to create and edit videos for this and other use cases.

Multi-Modal

To close, I want to touch on multi-modality. In some sense, video is already multi-modal, since it requires stitching together audio, images, and (likely) text, but there are a few other things worth considering.

I. Multi-modal Image/Text/Video

Today, most image models are unable to render text in the images they generate. But in many cases, such as design and marketing, one might want text overlaid on the image, and placing it well requires factoring in the image’s style and content. Something similar may be needed for video.

For now, companies building applications will likely have to plug this gap with post-processing layers on top of their image/video models.

Another example is storytelling. Most stories and presentations contain a mix of text and images, in context.

One company doing this is Tome, an AI-powered storytelling platform that can generate presentations combining text and images from a text prompt. I gave it a prompt to create a presentation about the State of Generative AI, and it produced a presentation in response.

Another interesting use case here is chat interfaces. While ChatGPT has shown a lot of people what might be possible with AI, today it responds only with text. A future advancement might be responding with images or videos as well, depending on the need.

For example, Ex-Human is building chatbots that can be multi-modal and respond with images and memes as needed.

II. Actions

Generating images, text, and other media is nice, but it would be even better if AI could also interact with our programs based on what we tell it and take actions on our behalf.

Actions could be simple personal tasks, such as:

cancel my ticket

change my flight

remind me to do X

purchase X product

Some of these are already doable, with varying degrees of accuracy and success, using Siri, Alexa, and others.

But actions could also be more complicated, such as taking arbitrary actions on any interface, even ones without pre-built integrations.

One might type in a prompt as text or say something as audio, and the output may be an action.

This is another area I think a lot of companies will work on, either directly by aiming for artificial general intelligence or in constrained spaces such as more useful AI assistants (no offense to Siri).

Adept is one of the interesting companies in this space. It is working toward a foundation model for actions and has built an “Action Transformer.”

This type of approach could amount to a new way of interacting with software interfaces, or it can be thought of as RPA (robotic process automation) on steroids.

In one example, the AI completes a text instruction to add a new lead to Salesforce.

Thanks for reading! If you liked this post, give it a heart up above to help others find it or share it with your friends.

If you have any comments or thoughts, feel free to tweet at me.

If you’re not a subscriber, you can subscribe for free below. I write about things related to technology and business once a week on Mondays.