The State of AI Gross Margins in 2025

What the stack earns today and considerations for AI builders

This is a weekly newsletter about the business of the technology industry. To receive Tanay’s Newsletter in your inbox, subscribe here for free:

Hi friends,

I hope everyone had a great Labor Day weekend. There has been a lot of discussion about gross margins in AI land recently, so I figured I would chime in with some thoughts. First, I'll give a quick lay of the land on where gross margins stand across the stack. Then, I'll share a short list of considerations on the gross margin debate for builders across the stack, particularly at the application layer.

Lay of the Land on Gross Margins

Chips: Nvidia still captures premium economics. Excluding one-off charges, gross margins for chips have consistently hovered in the 70% range across quarters.

Cloud: Hyperscalers do not break out product gross margin cleanly for AI, so operating margin and corporate gross margin disclosures are the best signals. AWS reported a 33% operating margin for the quarter and 36.7% on a trailing twelve-month basis. Microsoft reported Microsoft Cloud gross margin at 69%, noting that scaling AI infrastructure is pressuring the percentage. Google Cloud delivered roughly a 21% operating margin. Net, the platforms are monetizing AI demand even while they fund the buildout. However, AI is pressuring gross margins, as Microsoft called out, and some of my estimates put AI-related gross margins in the 50-55% range, though they could be lower for the other clouds.

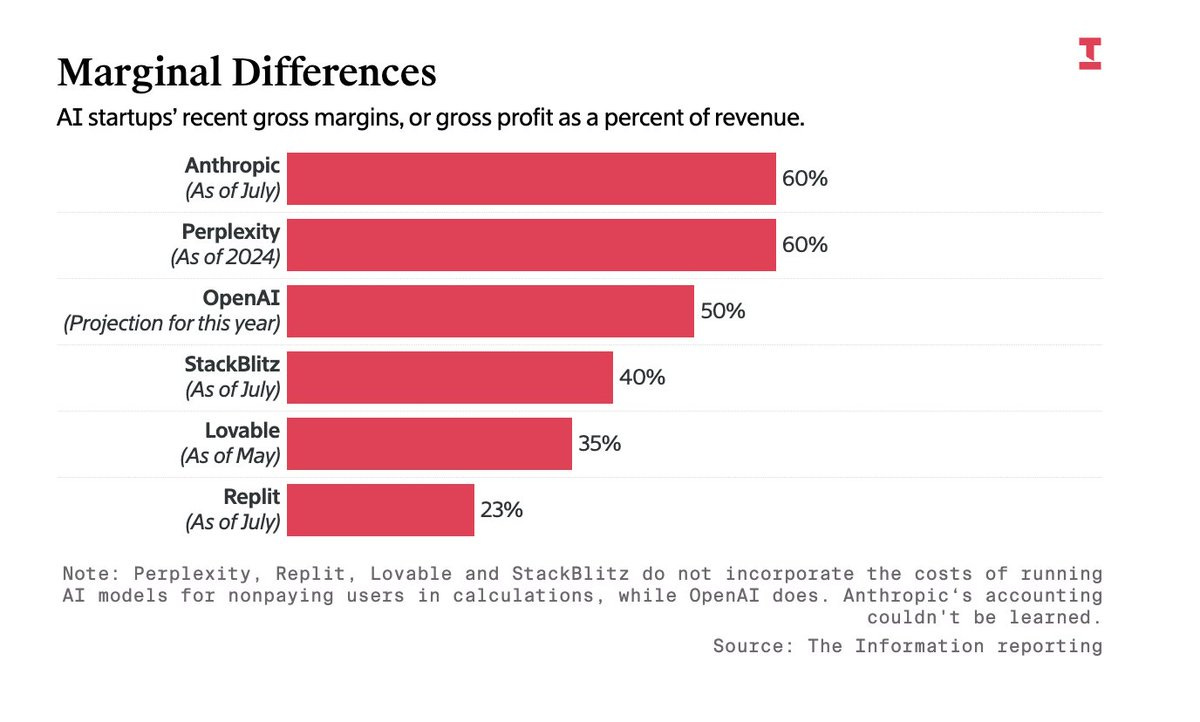

Models: Outside estimates peg OpenAI’s gross margin at roughly 50%, while Anthropic’s, per The Information, hovers around 60%. It should be noted that a number of business lines, such as the API and the consumer product, make up those numbers, and each looks pretty different. It should also be noted that training costs are not included in these gross margins.

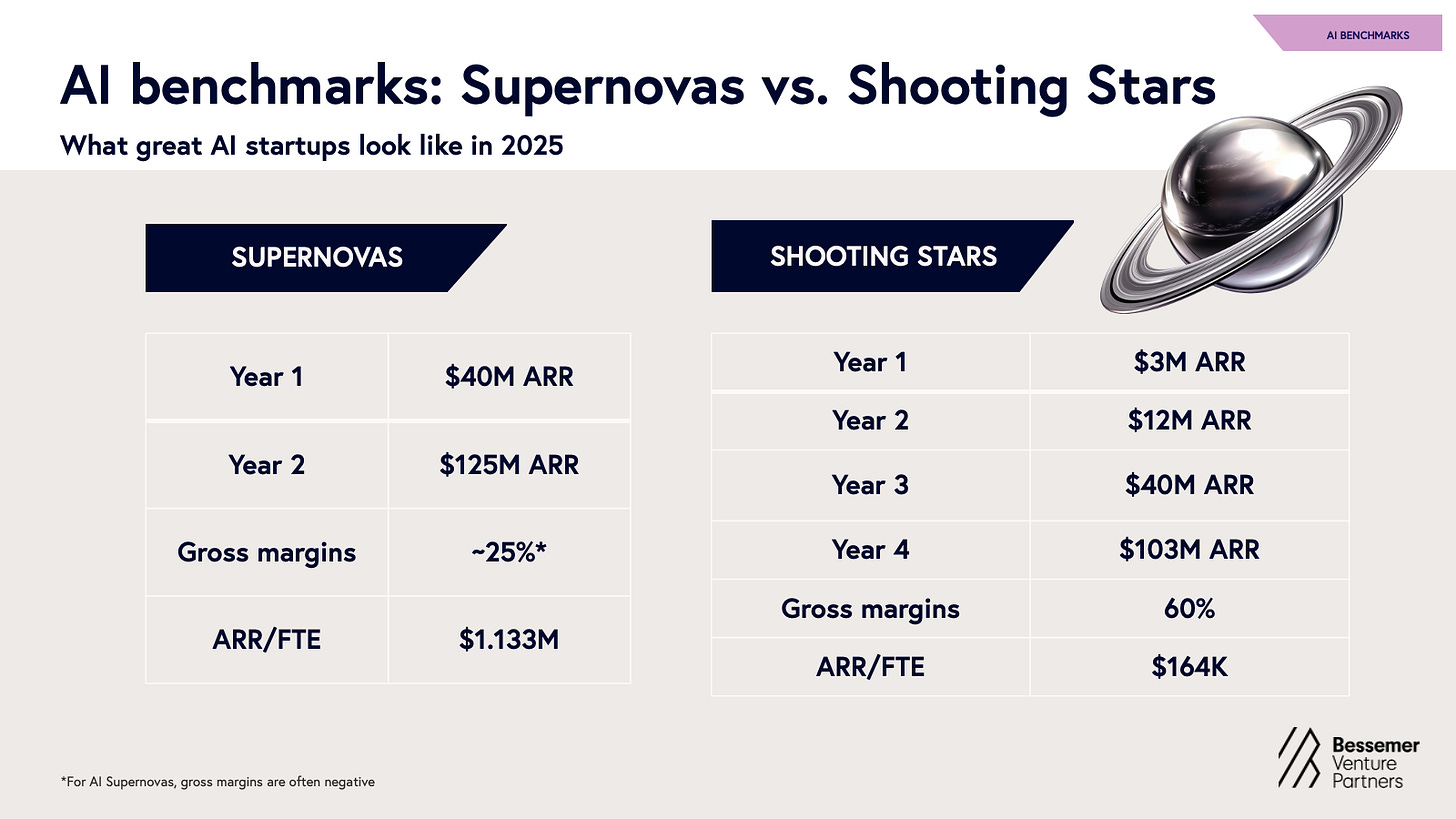

Applications: This is where the spread is widest. Bessemer’s 2025 dataset shows fast-ramping AI “Supernovas” averaging about 25% gross margin early on, while steadier “Shooting Stars” trend closer to 60%. They also note that many of the AI Supernovas have negative gross margins, something we don’t tend to see often in software.

Additionally, companies may calculate their gross margins differently from one another, as the chart below from The Information notes: some include the cost of free users, while others do not.
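To see how much that accounting choice can move the headline number, here is a minimal Python sketch. The `Period` fields and every dollar figure are hypothetical, chosen only to illustrate the mechanics, not drawn from any company's disclosures.

```python
from dataclasses import dataclass


@dataclass
class Period:
    revenue: float              # paid revenue for the period
    paid_inference_cost: float  # model/API spend serving paying users
    free_inference_cost: float  # model/API spend serving free users
    other_cogs: float           # hosting, support and other cost of revenue


def gross_margin(p: Period, include_free_users: bool) -> float:
    """Gross margin as a fraction of revenue, with or without free-user costs in COGS."""
    cogs = p.paid_inference_cost + p.other_cogs
    if include_free_users:
        cogs += p.free_inference_cost
    return (p.revenue - cogs) / p.revenue


# Hypothetical month: $1.0M revenue, $400k paid-user inference,
# $200k free-user inference, $50k other COGS.
month = Period(1_000_000, 400_000, 200_000, 50_000)
print(f"excluding free users: {gross_margin(month, False):.0%}")
print(f"including free users: {gross_margin(month, True):.0%}")
```

With these made-up numbers the same business could report either 55% or 35%, which is why comparing app-layer gross margins across companies needs care.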

A Few Considerations on Gross Margins

The application layer has seen the widest dispersion in gross margins, so I’ll touch on a few considerations for builders to keep in mind. Coding apps are probably where the gross margin debate comes up most heavily, so I’ll use them as examples throughout.

1) Inference costs are falling, but the benefits accrue unevenly

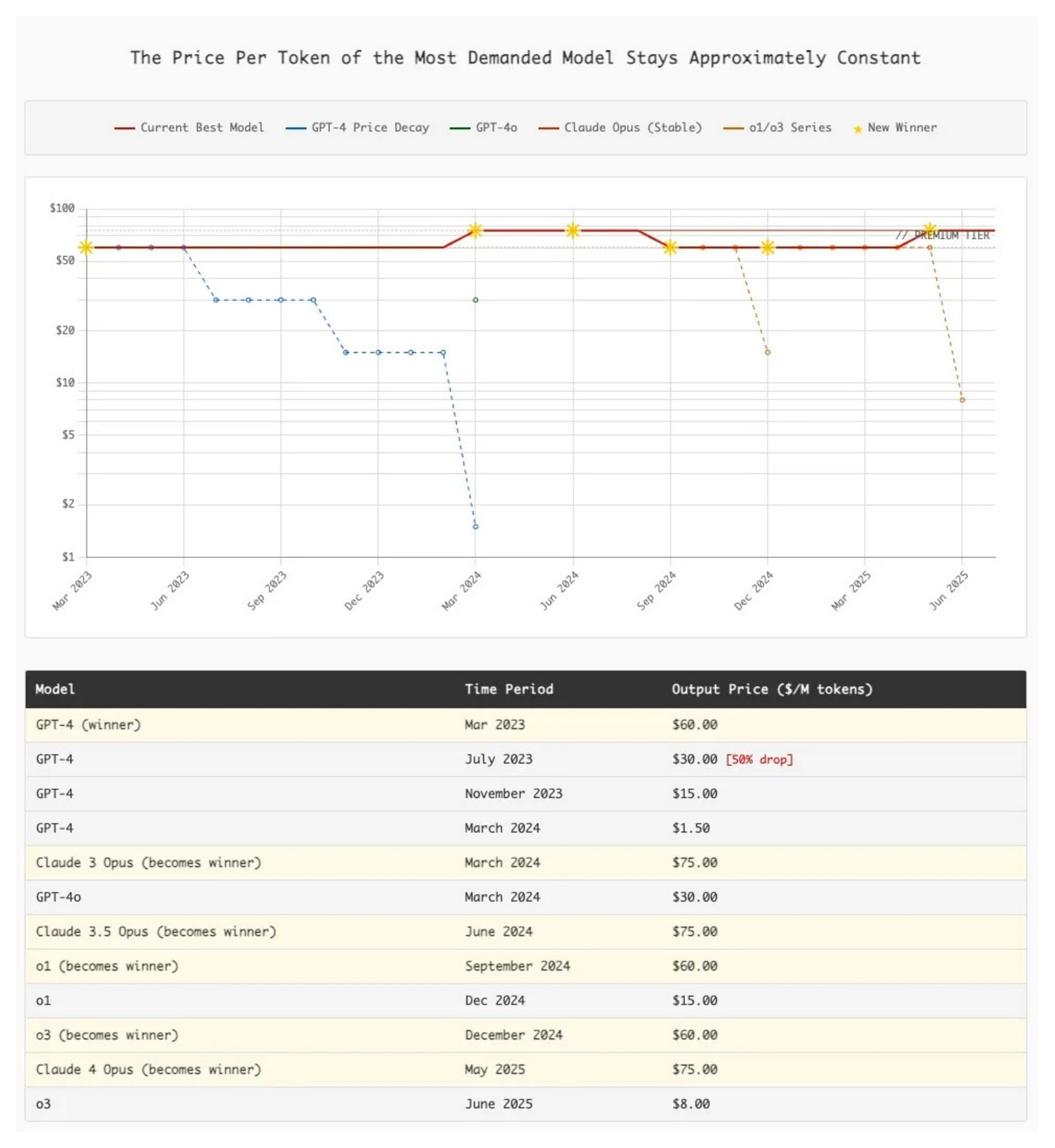

There’s been a lot of talk about inference costs falling 80-90%+ per year. While that’s true for a given model, the price of top-end models has stayed steady or even gone up, as Ethan noted in the excellent analysis below.

The key question is simple: does your use case require the top model on every request, or do you only need to meet a quality bar? If you only need to meet a bar, routing lets you send most traffic to cheaper models and burst to the frontier when needed. If your users demand the best every time, you need pricing that mirrors usage, or margins will compress as quality expectations rise.
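The arithmetic behind routing is a weighted average of per-call costs. The sketch below assumes a single price per request and two model tiers; the $0.10 price and the per-call costs are hypothetical placeholders, not real provider prices.

```python
def blended_cost_per_request(frontier_share: float,
                             frontier_cost: float,
                             cheap_cost: float) -> float:
    """Expected inference cost per request when `frontier_share` of traffic
    goes to the frontier model and the rest to a cheaper model."""
    if not 0.0 <= frontier_share <= 1.0:
        raise ValueError("frontier_share must be between 0 and 1")
    return frontier_share * frontier_cost + (1.0 - frontier_share) * cheap_cost


def gross_margin(price: float, cost: float) -> float:
    """Gross margin as a fraction of the price charged per request."""
    return (price - cost) / price


# Hypothetical unit economics: $0.10 of revenue per request,
# $0.05 per frontier call, $0.005 per call to a cheaper model.
PRICE, FRONTIER, CHEAP = 0.10, 0.05, 0.005
for share in (1.0, 0.5, 0.2):
    cost = blended_cost_per_request(share, FRONTIER, CHEAP)
    print(f"{share:.0%} frontier: cost ${cost:.4f}, margin {gross_margin(PRICE, cost):.1%}")
```

Under these assumptions, sending every request to the frontier model yields a 50% margin, while routing 80% of traffic to the cheaper model lifts it to 86%. If users insist on the frontier for every request, `frontier_share` is pinned at 1.0 and only pricing can move the margin.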

2) Control and workflow depth decide unit economics

Tied to the above, when users insist on the highest-performing model, a company’s COGS rides on someone else’s price card: the model provider’s. Coding assistants feel this more than almost any other category because quality is visible on every keystroke. In fixed workflows like document processing or voice IVR, vendors own the acceptance criteria, which lets them route to cheaper models by default and escalate only on hard cases. Over time, that gives them the opportunity to push app-layer margins toward SaaS-like territory.
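The "cheap by default, escalate on hard cases" pattern is often called a model cascade. Here is a minimal sketch, assuming the vendor can express its acceptance criteria as a function. The model callables, the `accept` check and the per-call costs are hypothetical stand-ins, not real API calls.

```python
from typing import Callable, Sequence, Tuple

# A "model" is any callable from prompt to output; real API calls would go here.
Model = Callable[[str], str]


def cascade(prompt: str,
            tiers: Sequence[Tuple[str, Model, float]],
            accept: Callable[[str], bool]) -> Tuple[str, str, float]:
    """Try tiers from cheapest to most expensive and stop at the first output
    that passes `accept`. Returns (tier_name, output, total_cost), where
    total_cost counts every tier that was called."""
    if not tiers:
        raise ValueError("need at least one tier")
    total_cost = 0.0
    name, output = "", ""
    for name, model, cost in tiers:
        output = model(prompt)
        total_cost += cost
        if accept(output):
            break
    # On break these are the accepted tier's values; otherwise the last (strongest) tier's.
    return name, output, total_cost


# Hypothetical stand-ins for a cheap model and a frontier model.
def cheap_model(prompt: str) -> str:
    return "" if "handwritten" in prompt else f"fields from {prompt}"


def frontier_model(prompt: str) -> str:
    return f"fields from {prompt} (frontier)"


def accept(output: str) -> bool:
    # Vendor-owned acceptance check: here, simply "extraction is non-empty".
    return output != ""


TIERS = [("cheap", cheap_model, 0.001), ("frontier", frontier_model, 0.02)]
print(cascade("typed invoice", TIERS, accept))
print(cascade("handwritten invoice", TIERS, accept))
```

The easy case stops at the cheap tier; the hard case pays for both calls. The margin benefit depends on how rarely escalation happens, which is exactly what owning the acceptance criteria lets a vendor measure and tune.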

Workflow depth matters as well. Many AI companies are derided as “wrappers,” and that label is often given as the reason for their low gross margins. Yet classic SaaS was, in practice, a wrapper over databases and public cloud too, and it sustained 70-80% gross margins. The path is to go deeper and take control so buyers see more than “access to a model.” Control, discussed above, is one lever; good old workflow depth is another. Collaboration, versioning, audit, analytics, governance, integrations into the rest of the stack, and deeper coverage of the workflow itself remain tried and tested ways to grow ACVs without large increases in AI COGS.

3) Do not live or die on tokens alone

As hinted at above, there are more ways to make money than marking up tokens. Many companies, even in areas like coding and vibecoding where much of the value comes from the model itself, are seeking other ways to monetize that are not tied to AI inference costs and so raise aggregate gross margins. A few examples include:

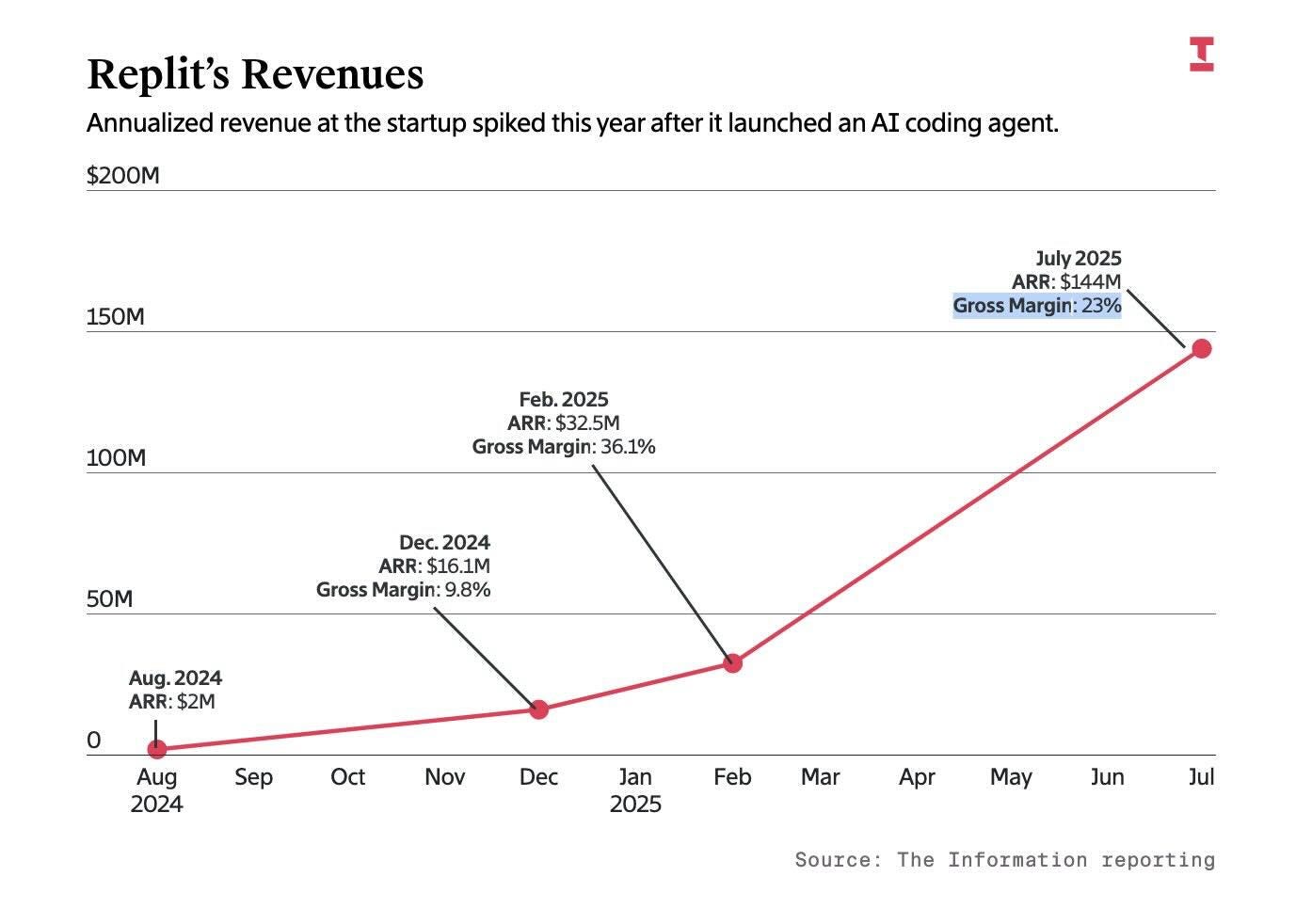

Hosting and deploy. Bolt and Replit monetize runtime, bandwidth, storage, domains, and private deployments once projects live inside their environments. That raises ARPU and decouples margin from token pricing.

Marketplaces and services. Replit’s Bounties take a ten percent fee from posters, which is a clean non-inference take rate.

Advertising / Affiliate. OpenAI has been piloting checkout inside ChatGPT with Shopify, which would create commission revenue on the free tier. Perplexity has been testing sponsored follow-up questions. It’s likely only a matter of time before some form of advertising finds its way into most consumer chatbots.
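Aggregate gross margin is a revenue-weighted average, so adding higher-margin, non-inference lines pulls the blend up even if token economics stay flat. The revenue mix and per-line margins below are hypothetical and not taken from Bolt, Replit, OpenAI or Perplexity.

```python
from typing import Dict, Tuple


def blended_gross_margin(lines: Dict[str, Tuple[float, float]]) -> float:
    """`lines` maps a revenue line to (revenue, gross margin as a fraction).
    Returns the revenue-weighted gross margin across all lines."""
    total_revenue = sum(revenue for revenue, _ in lines.values())
    if total_revenue <= 0:
        raise ValueError("total revenue must be positive")
    gross_profit = sum(revenue * margin for revenue, margin in lines.values())
    return gross_profit / total_revenue


# Hypothetical revenue mix ($M per month) and margins for each line.
tokens_only = {"inference-heavy subscriptions": (10.0, 0.30)}
diversified = {
    "inference-heavy subscriptions": (10.0, 0.30),
    "hosting and deploy": (3.0, 0.60),
    "marketplace take rate": (1.0, 0.90),
}
print(f"tokens only: {blended_gross_margin(tokens_only):.1%}")
print(f"diversified: {blended_gross_margin(diversified):.1%}")
```

In this illustration, layering hosting and a marketplace fee on top of the same subscription business lifts the blended margin from 30% to roughly 41%.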

4) Pricing models still need work and iteration

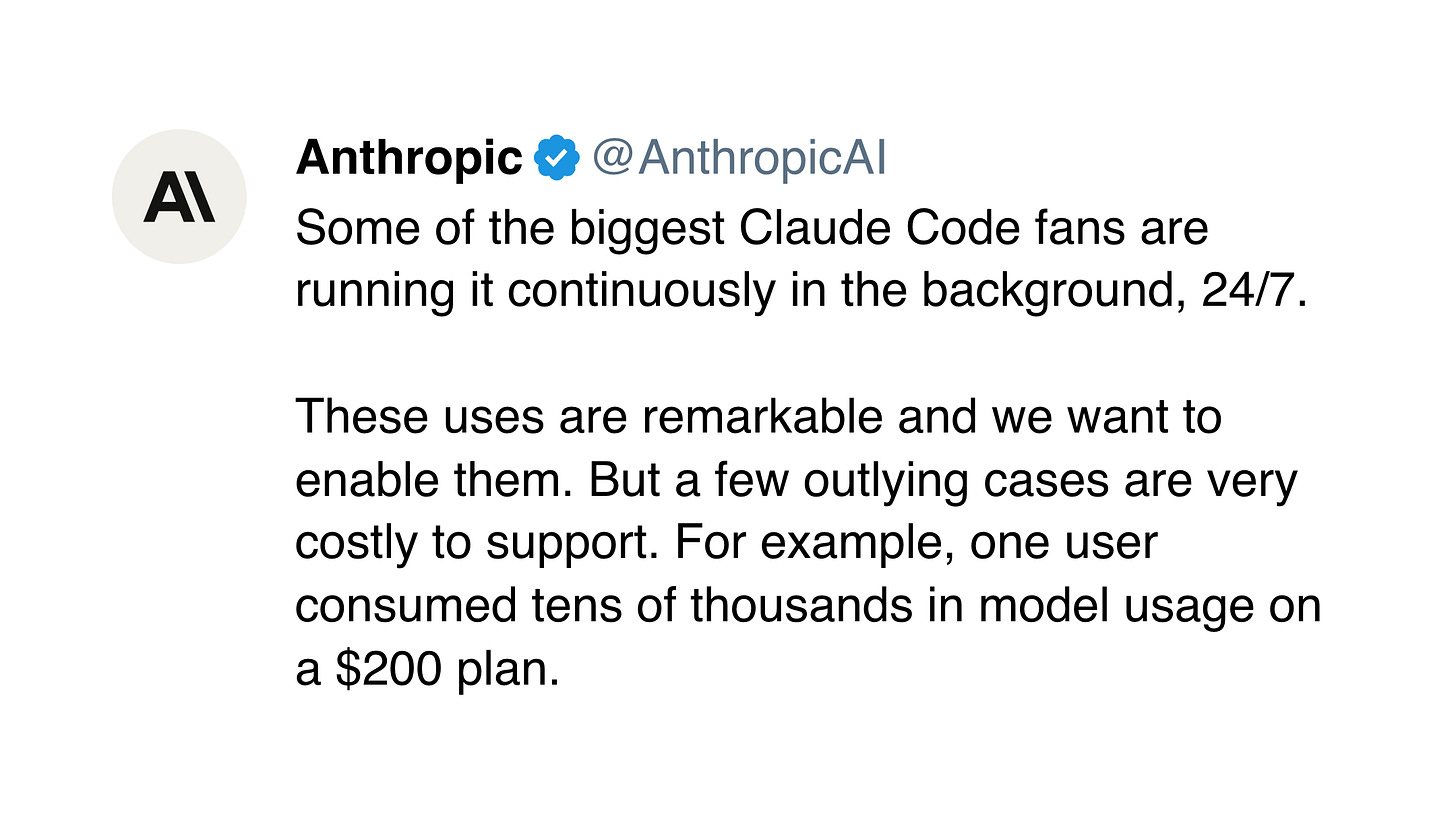

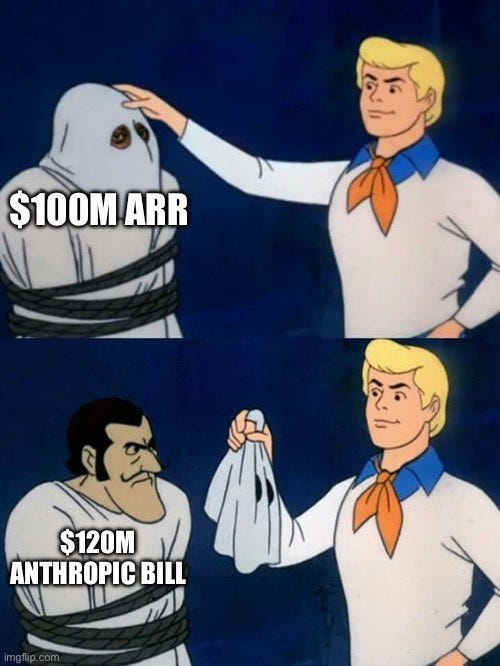

Most companies started with simple per-seat plans and ran into cost spikes from power users. The shift since has been toward pricing models that make costs visible and predictable. Examples include seats plus pooled credits, usage with an included allowance and clear pass-through, token bundles with rollover, and BYOK (bring your own key) for heavy cohorts. Replit has iterated across these ideas, pairing seats with credits, layering on pay-as-you-go, and adjusting tiers as usage patterns change. Anthropic noted that under the original pricing structure of Claude Code, they were losing tens of thousands of dollars per month on a user of a $200 plan.
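Here is a minimal sketch of why "seat plus pooled credits" defuses the power-user problem. It does not reproduce Anthropic's or Replit's actual plans: the `Plan` fields, the $120 allowance, the 1.2x overage markup and the usage figures are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Plan:
    seat_price: float        # monthly fee per seat
    included_credits: float  # $ of inference included per seat, pooled across the team
    overage_markup: float    # multiplier on inference cost beyond the pool (1.0 = pure pass-through)


def monthly_bill(plan: Plan, seats: int, inference_cost: float) -> float:
    """Seat fees plus marked-up overage on inference spend beyond the pooled allowance."""
    pool = plan.included_credits * seats
    overage = max(0.0, inference_cost - pool)
    return plan.seat_price * seats + overage * plan.overage_markup


def gross_margin(revenue: float, cost: float) -> float:
    return (revenue - cost) / revenue


# Hypothetical plans: a flat $200 seat with unlimited use, and the same seat
# with $120 of pooled credits and 1.2x pass-through on overage.
flat = Plan(seat_price=200, included_credits=math.inf, overage_markup=1.0)
pooled = Plan(seat_price=200, included_credits=120, overage_markup=1.2)

for label, cost in (("typical user", 60.0), ("power user", 2_000.0)):
    for name, plan in (("flat", flat), ("pooled", pooled)):
        bill = monthly_bill(plan, seats=1, inference_cost=cost)
        print(f"{label}, {name}: bill ${bill:,.0f}, margin {gross_margin(bill, cost):.0%}")
```

Both plans bill a typical user the same $200. The flat plan loses nine times its revenue on the power user, while the pooled plan stays positive because overage is passed through. Pooling the allowance per team means light seats subsidize heavier teammates before any overage kicks in.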

Pricing models will continue to evolve and will remain a lever companies can pull to improve gross margins, particularly when a small subset of extreme power users is dragging them down.

The chart below shows how Replit’s gross margins changed drastically over time while revenue continued to grow as the company adapted its pricing models.

5) Net Margins Matter

There’s been a lot of emphasis in recent weeks on the gross margin profiles of these companies. What’s discussed less is that these companies are ultimately making a trade-off: giving up some gross margin in exchange for faster growth and lower costs in other areas.

You can trade some gross margin for distribution if product-led growth is truly working: it results in faster revenue growth and lower sales and marketing (and perhaps even G&A) as a percentage of revenue. The Supernova pattern that Bessemer highlighted is exactly that: very high ARR per employee and thin early gross margins that thicken over time with the additions above, but much lower S&M and G&A as a percentage of revenue than their SaaS counterparts, given the fast scale and a bottom-up motion that truly works.
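A back-of-the-envelope sketch makes the trade-off concrete. It uses operating margin (gross margin minus S&M, R&D and G&A, all as a share of revenue) as a stand-in for the net margin profile, and every percentage is hypothetical rather than Bessemer's data.

```python
from typing import Dict


def operating_margin(gross_margin: float, opex_share: Dict[str, float]) -> float:
    """Gross margin minus operating expenses, everything as a fraction of revenue."""
    return gross_margin - sum(opex_share.values())


# Hypothetical profiles, each line as a share of revenue.
classic_saas = operating_margin(0.78, {"S&M": 0.40, "R&D": 0.22, "G&A": 0.12})
supernova_early = operating_margin(0.40, {"S&M": 0.10, "R&D": 0.20, "G&A": 0.06})
# Same lean cost base after pricing and non-inference revenue lift gross margin.
supernova_mature = operating_margin(0.60, {"S&M": 0.10, "R&D": 0.20, "G&A": 0.06})

for name, m in (("classic SaaS", classic_saas),
                ("Supernova, early", supernova_early),
                ("Supernova, mature", supernova_mature)):
    print(f"{name}: {m:.0%}")
```

Under these assumptions, a 40% gross margin business with a lean cost base lands at the same 4% operating margin as a 78% gross margin SaaS company, and pulls well ahead once its gross margin thickens.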

The goal is not a perfect gross margin in isolation. The target is a healthy net margin profile as cohorts mature.
