Hi friends,

There have been a lot of hot takes about the “end of Google” with the rise of ChatGPT and similar products. In this piece, I’ll discuss that topic, focusing on the impact of Generative AI (and foundation models more broadly) on Google.

I’ll discuss the following:

How Generative AI might impact Search Queries

How Google might respond

Generative AI and Search Queries

There are four ways I see Generative AI, and Foundation Models more broadly, impacting the volume of Google Search Queries.

I. GPT-3 Generated Content Crowds out Search Results

With OpenAI’s GPT-3 and other similar models, the cost of creating reasonably human-readable text content goes to essentially zero. With that, I expect to see much more AI-generated content created to rank for target keywords in search engines.

Given that AI can create content that is pretty well-tailored to and optimized for target keywords, it’s very possible that this content does well in search rankings and could crowd out human-created content.

In some cases, that may be okay since the AI-generated content could still answer the searcher’s questions. But in other cases, users may start to find the search results unsatisfactory and turn to other sources where they feel user-generated content is more prevalent.

Curation and going direct to trusted sources may become more critical, with places like TikTok, Reddit, and Instagram benefiting. Indeed, many users already start their searches at places like these, with a reported 40% of Gen Z starting searches on TikTok rather than Google.

II. GPT-based Chat Answers eat into Traditional Search

We’ve seen with ChatGPT’s recent uptake and success that generative AI-based search engines with a chat or conversational interface could beat out Google and traditional search engines on certain types of queries. While I think the idea of them disrupting Google by themselves is a bit far-fetched, here are some benefits of this type of search engine:

conversational (can ask questions and get answers, similar to chatting with a smart friend)

able to synthesize from multiple sources

able to directly answer the question as asked by the user (as opposed to Google, which always just returns potential links or exact-match text from its knowledge graph)

An example is this query below about important inventions, which ChatGPT answers exactly as the user wanted, but Google just suggests some links, many of which may not have the answer.

ChatGPT will continue to improve, probably adding the ability to connect to the internet for real-time information and perhaps linking to sources. Even so, it won’t be able to compete for all kinds of queries, though it could compete for science/math-based, fact/historical-based, and creative queries.

One benefit for Google is that some of the most commercial queries, those related to finding products and services (e.g., eCommerce, Travel, Healthcare Services), may be some of the hardest for ChatGPT-type products to compete for.


III. Copilots reduce the need for search

One new product type we’re going to see more of is “co-pilots”: essentially AI assistants that help users with specific tasks, built into the places where they tend to perform those tasks.

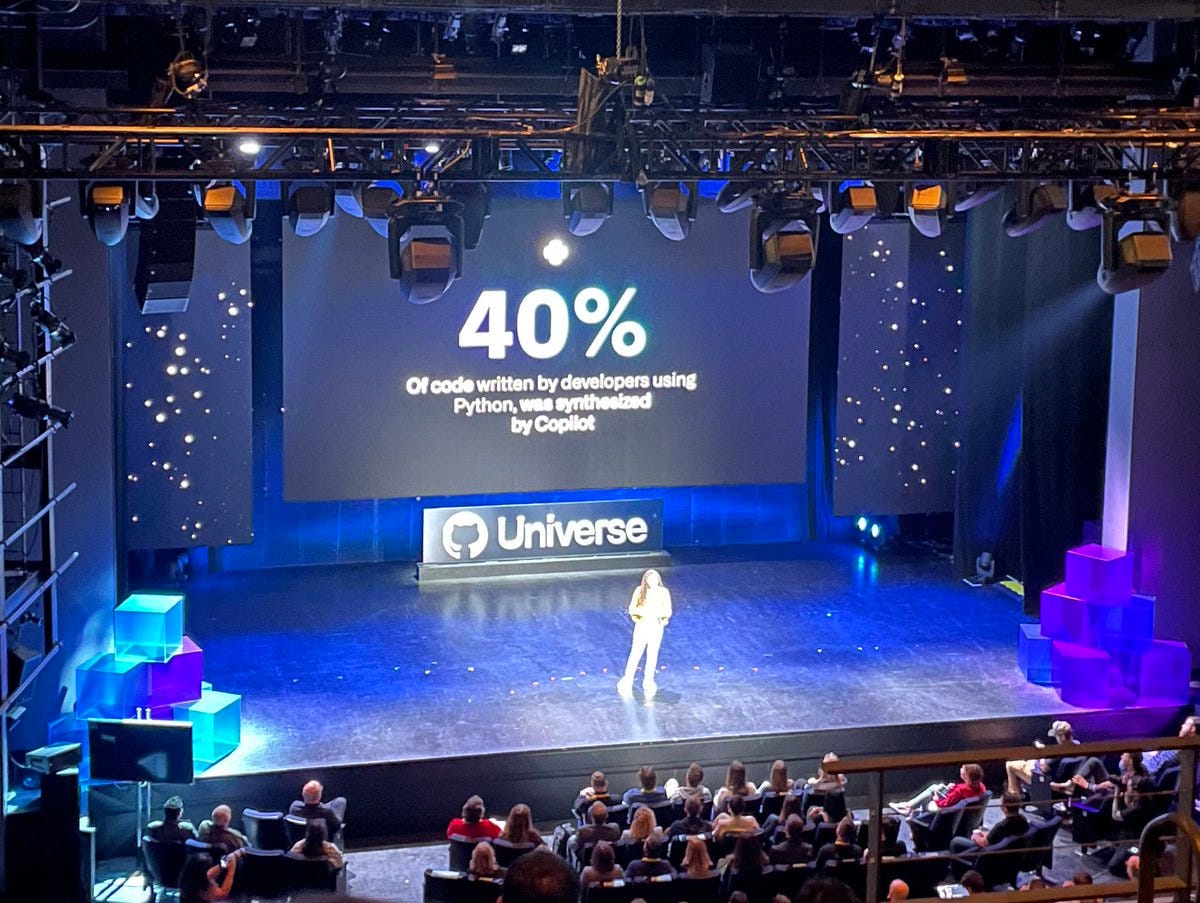

For example, GitHub Copilot can write up to 40% of the code for some users. By helping programmers while they code, it reduces their need to search Google for things they would otherwise have needed help with.

As just one example, this covers a small set of queries. But if you imagine co-pilots across many pieces of software (Spreadsheets, etc.) as well as for many different jobs (Lawyers, Designers), that reduces the need for searches about how to use specific tools or about the terminology of a job.

Overall, it’ll still be a small bucket in terms of relative impact to aggregate Google search queries, but it’s worth pointing out.

IV. Image Generation eats into Image Search

With image generation models such as DALL-E and Stable Diffusion, users can create the exact images they need rather than using image search to find specific images.

As prompting continues to improve and costs go down, it’s very possible that for a lot of image search use cases people just generate a unique image for themselves, impacting search volume.

However, image searches where people are trying to reference a source image or specific artifact (specific products, specific logos) and real-world events/people will continue.

In addition, image search today is not very heavily monetized, and the queries that are monetized are probably less susceptible to replacement via image generation (as in the example below), so the impact on Google’s actual business may be minimal.

How Google Might Respond

Of the things I’ve identified above, the two biggest risks are Google’s search results being drowned in AI-generated content and Google’s search results being unable to compete with GPT-based models. I can see Google doing a few things to respond:

Integrating LLMs

Google is no stranger to large language models. It has trained a 540B parameter LLM called PaLM (Pathways Language Model). For comparison, GPT-3 has 175B parameters.

Google also has chat-like technology called LaMDA, which stands for Language Model for Dialogue Applications.

Today, the main hindrance to integrating these models into Google search is likely the inference cost. Given that Google likely handles somewhere on the order of ~10B searches a day, even an inference cost of 0.01 cents per query, applied across all searches to generate an LLM-based result, would cost Google $1M/day.
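To make that back-of-the-envelope math easy to replay, here is a minimal sketch. It assumes the ~10B searches/day estimate above, and the per-query costs and the `share_of_queries` parameter are purely illustrative inputs, not actual Google figures.

```python
# Back-of-the-envelope daily inference cost. All figures are illustrative
# assumptions, not real Google numbers.
SEARCHES_PER_DAY = 10_000_000_000  # ~10B searches/day, the rough estimate used above


def daily_cost_usd(cost_per_query_usd: float, share_of_queries: float = 1.0) -> float:
    """Daily spend if `share_of_queries` of all searches get an LLM-generated result."""
    return SEARCHES_PER_DAY * share_of_queries * cost_per_query_usd


if __name__ == "__main__":
    # Try a few hypothetical per-query costs, expressed in cents.
    for cents in (0.01, 0.1, 1.0):
        usd = cents / 100  # convert cents to dollars
        print(f"{cents} cents/query -> ${daily_cost_usd(usd):,.0f} per day")
```

At 0.01 cents per query this comes to roughly $1M/day. At a full cent per query it would be roughly $100M/day, which is why limiting LLM results to a subset of queries (the `share_of_queries` knob) matters so much.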

The other related element is latency: typical Google Search results come back much faster than responses from these LLMs.

For large-scale integration, latency and cost still need to improve. However, I imagine Google will slowly integrate LLMs into search results in some form, perhaps starting with certain types of searches and focusing on using LLMs to improve (and index) its “knowledge panel”, which shows a summary result for a search query about a person/place/thing.

The other critical thing Google will be wary of is that LLMs tend to hallucinate. Because of that, it will likely be very restrictive about which queries LLM results show up on, and may prefer to use them to suggest offline improvements to knowledge panels rather than dynamically generate results for absolutely any query without knowing whether they’re truly accurate.

“We are absolutely looking to get these things out into real products and into things that are more prominently featuring the language model rather than under the covers, which is where we’ve been using them to date. But, it’s super important we get this right.” – Jeff Dean, Head of Google AI

Upranking User Generated Content

Google may also take more steps to favor content that is generated by humans, or at least is unique relative to other content, to avoid ranking AI-generated SEO farms too highly.

Some of the things they could do are:

Putting more weight on the performance of links and content on social channels and/or on actual Search Engine Result Pages (e.g., if people keep bouncing off a link, penalize it more strongly)

Favoring User Generated Content that has already been validated by other users (content on TikTok, Substack, Reddit, Twitter, etc. which has been popular on that platform)

Creating or leveraging algorithms that indicate whether a piece of content was AI-generated and potentially downranking such content slightly

Favoring more unique content (with the hypothesis being that AI-generated content on the same topic will overlap or become duplicative over time, given the way the models work)
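As a rough illustration of the last idea, here is a toy uniqueness score based on word shingles and Jaccard similarity. This is only a sketch: the function names and the shingle size `k` are hypothetical choices of mine, and nothing here reflects how Google actually ranks content.

```python
# Toy near-duplicate check using word shingles and Jaccard similarity.
# Illustrative only: names and parameters are hypothetical, and this is
# not a description of any real search engine's ranking.

def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word windows) in text."""
    words = text.lower().split()
    if len(words) < k:
        # Short texts become a single shingle (or nothing if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of intersection over size of union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def uniqueness(candidate: str, corpus: list[str], k: int = 3) -> float:
    """1 minus the highest similarity between candidate and any corpus page."""
    cand = shingles(candidate, k)
    best = max((jaccard(cand, shingles(doc, k)) for doc in corpus), default=0.0)
    return 1.0 - best


if __name__ == "__main__":
    pages = [
        "the ten most important inventions in human history",
        "how to bake sourdough bread at home",
    ]
    print(uniqueness("the ten most important inventions of all time", pages))
```

A page that repeats what is already indexed scores close to 0, while a page with no overlapping phrasing scores 1. A ranker could, in principle, use a score like this as one small signal among many.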

Investing Further in Semantic Search

Google does use semantic search, but today many results are still very heavily keyword-based. Continued investment in semantic search would help Google better understand what a user was trying to convey with a query and find results that have an answer even if the specific words aren’t mentioned, improving the quality of search results.

This is particularly important given that so many queries have literally never been searched for before, as below. In these cases, Google doesn’t have prior user behavior for the same query to go on.

Closing Thoughts

I think Generative AI poses a threat to Google, but much less so than some of the “Google is going to die” takes might suggest. Google still holds the distribution advantage, and while Google may lose some queries over time, many of the best monetizing queries are going to be hard for LLM-based products to replace. In addition, Google can layer answers from such models into its core offerings over time. So rather than being a disruptive threat, I think Generative AI will force Google to evolve its offering.

Thanks for reading! If you liked this post, give it a heart up above to help others find it or share it with your friends.

If you have any comments or thoughts, feel free to tweet at me.

If you’re not a subscriber, you can subscribe for free below. I write about things related to technology and business once a week on Mondays.