Considerations for AI-Native Startups

How to think about moats, defensibility, and more when building with LLMs

As large language models have exploded over the past year, numerous startups have begun to build AI-native applications to disrupt industries. There's been a lot of talk about how many of them are simply “wrappers on OpenAI” as part of discussions about moats and defensibility. That's what I'll be going into today.

As these startups build AI-native applications with LLMs, there are a few considerations for them to think about which broadly relate to product approach as well as how to build defensibility vis-a-vis the models and incumbent applications.

Generally, the four considerations below can be thought about as determining two things:

(First two) Does the startup provide enough value on top of the model layer to not get commoditized by it?

(Last two) How and on what basis does the startup compete with other applications, including incumbents?

1/ How much "AI value" does the startup provide on top of foundation models?

The vendors and models that most of these new startups (and incumbents) use for their applications are the same: namely OpenAI, Cohere, and Anthropic, among others. However, startups may end up using them in slightly different ways.

At a high level, the range of options for startups is (from easiest to hardest):

Prompt Engineering only: Focus on improving the model output by just engineering the prompts used in the models and by potentially selecting different vendors for different prompts

Fine-tuning: Improve the model by fine-tuning it with feedback and input/output data from a dataset or real usage

Train own models: Over time, potentially train very specialized models for specific use cases using all the data collected from the application in production.
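To make the first option concrete, here is a minimal sketch of what "prompt engineering only" can look like in code: a table that maps each task to a prompt template and a vendor. The vendor labels (`vendor_a`, `vendor_b`), the task names, and the `build_request` helper are all hypothetical and chosen for illustration. No real vendor API is called here.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptSpec:
    vendor: str         # hypothetical vendor label, not a real API identifier
    template: Template  # the engineered prompt for this task


# Hypothetical routing table: each task gets its own prompt and vendor.
PROMPTS = {
    "sales_email": PromptSpec(
        vendor="vendor_a",
        template=Template("Write a short sales email to $recipient about $product."),
    ),
    "blog_outline": PromptSpec(
        vendor="vendor_b",
        template=Template("Outline a blog post on $topic in five bullet points."),
    ),
}


def build_request(task: str, **fields: str) -> dict:
    """Fill in the task's prompt template and record which vendor should serve it."""
    spec = PROMPTS[task]
    return {"vendor": spec.vendor, "prompt": spec.template.substitute(**fields)}


request = build_request("sales_email", recipient="Dana", product="Acme CRM")
print(request["vendor"])
print(request["prompt"])
```

Everything a startup owns in this setup is the table itself, which is part of why this option is the easiest to build and also the easiest for someone else to copy.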

It's very possible that for horizontal use cases (e.g. general text generation for marketing, sales, regular content, business writing, etc), the value of prompt engineering and fine-tuning is minimal in the medium term, and that own models also get beaten out by the LLMs, especially the newer versions. For example, GPT-4 might render all the fine-tuned models that companies have developed for writing sales emails and blog posts irrelevant, making it easy for another startup that is just a wrapper on GPT-4 to offer the same quality that your startup has built up.

On vertical use cases (e.g., contract writing in legal, financial analysis, etc), there may be more lasting value in either heavily fine-tuned models or even own models, but that also depends heavily on how good a model like GPT-4 is out of the box.

2/ How important is private data/customer-specific data to the use case?

If all the data needed for a use case is largely public, then that limits some of the value on top that a startup can provide. For example, to generate relatively basic explainer essays, IG captions, marketing content, etc, one doesn't need too much proprietary data, and it's hard to go beyond what GPT-4 will produce out of the box.

However, if not all the data is public and a startup needs to connect to a customer's other applications or data warehouse to use it, then commoditization from startups building "model wrappers" is unlikely, since the models won't get access to a specific customer's data. An application is definitely needed to do that.

For example:

In customer support, private data is very important: there's only so much that the AI can do without access to the company's knowledge base, FAQs, past tickets, etc.

In image and text generation used in marketing, private data is only somewhat important: having data about the company's tone, formats, templates and other guidelines that can then be fed to the LLMs can improve the quality of the output. The greater the improvement from the private data, the lower the risk of the value being captured by the model vendors, since the startup is the one getting access to the private data.

In categories where private data is needed, there is less risk of all the value being captured directly by the models. To be clear, even in categories that require private data, many application companies may pop up in the same space, but each of them will benefit from some workflow lock-in and switching costs with the customers it serves, which will at least prevent it from being fully commoditized by the models.

Note that this is less about the difficulty of using LLMs with private data (companies like Pinecone, LangChain, and others are making that easier over time) and more that, by definition, you have to win the customer and integrate their data to actually provide the full value. The model vendors are unlikely to do that at scale by themselves, so an application vendor will be needed to create the value.
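As a rough illustration of what "integrating their data" means in practice, the sketch below picks the most relevant snippets from a customer's knowledge base and places them in the prompt. It assumes a toy keyword-overlap score in place of the embedding search a vector database would provide, and the helper names (`tokenize`, `top_snippets`, `build_prompt`) and the sample knowledge base are made up for this example.

```python
from __future__ import annotations

import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_snippets(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by how many words they share with the question.

    A toy stand-in for embedding-based retrieval; documents with no
    overlap are dropped.
    """
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(doc)), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:k] if score > 0]


def build_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved private snippets into the model's context."""
    context = "\n".join(f"- {snippet}" for snippet in top_snippets(question, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical customer knowledge base, for illustration only.
KNOWLEDGE_BASE = [
    "Refunds are issued within 14 days of purchase.",
    "The mobile app supports offline mode.",
    "To reset your password, open Settings and choose Security.",
]

print(build_prompt("How do I reset my password?", KNOWLEDGE_BASE))
```

The retrieval logic is simple and getting simpler with better tooling. The hard part is having `KNOWLEDGE_BASE` at all, which requires winning the customer and connecting to their systems.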

3/ What happens if/when incumbents add Generative AI into their product?

Depending on the category that new startups are building in, many of the incumbents are likely to be at least somewhat adaptable and see the impact that AI can have in their market.

That, coupled with the relative ease of using these models (directly via an API), especially when it comes to standard tasks such as text/image generation, means that incumbents are likely to ship at least light integrations of them.

We've already seen many examples of incumbents reacting across categories:

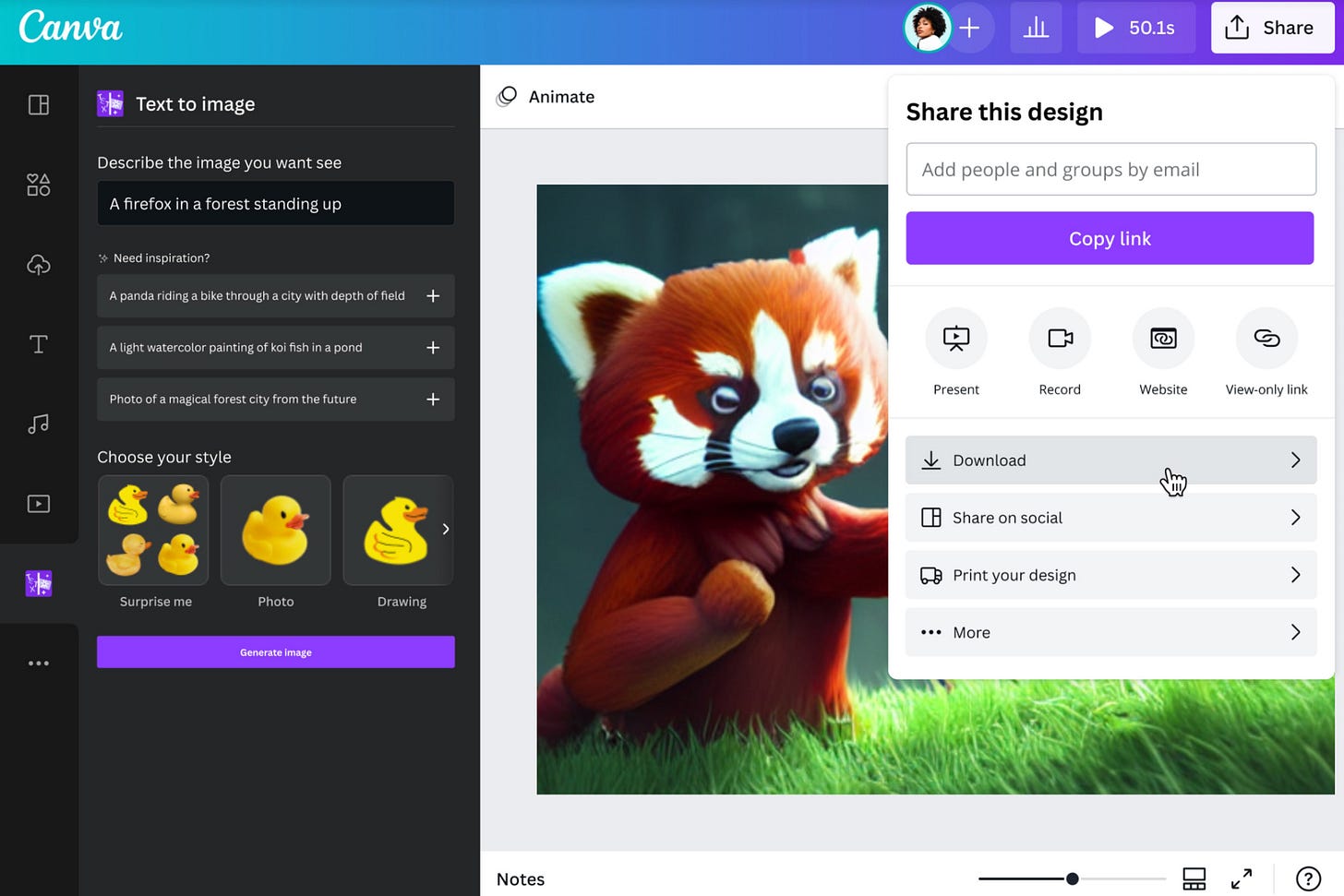

Design: Canva has added image generation functionality into their broader design product.

Customer Support: Intercom has added LLM-powered customer support features such as summarization, composing, and rephrasing.

GTM: Outreach has added an AI-powered smart assist feature. Walnut has launched Walnut Ace, an OpenAI API-powered personalized demo product. Salesforce has previewed the launch of EinsteinGPT, which helps generate leads and close deals.

Productivity: Microsoft has basically said they expect to add generative AI into the entire Office suite, Notion has launched Notion AI, and Google is expected to do the same in products like Google Docs and Gmail.

Given that incumbents are likely to integrate Generative AI, at least at a relatively basic level, new startups building in these categories will have to think about how they can add value beyond what incumbents can easily do. If not, a lot of the value of AI in that category might accrue to today's incumbents in that category.

At a high level, if building a product from the ground up in a category can allow for much deeper and better use of AI than simply adding it on as a feature/new product line, new startups may be well positioned.

4/ Should you bring AI into an existing workflow or redo the workflow with AI?

This is closely tied to the above consideration and is more applicable to certain categories than others.

In some cases, startups may decide that the best approach is to focus on doing the AI part best while fitting into the workflows/applications companies already use.

For example:

Diagram is building a co-pilot for design leveraging AI in the form of a Figma plugin.

Arcwise is building a plugin for Google Sheets to add AI features such as formula suggestions and construction.

In these cases, the companies are bringing AI into the existing tool/workflow, rather than creating a new tool from the ground up. While these businesses sometimes seem a bit niche, the approach can serve as a good wedge to then expand further. In addition, there have been numerous examples of large companies built as plugins, for instance think-cell in PowerPoint. But the risk they face is that the incumbents may build these features into their own products, in which case the startups will always need to be multiple times better to stay ahead or relevant.

The other option is to use AI to reimagine a workflow from the ground up as part of a broader product offering. In these cases, companies are thinking about what the workflow might look like if AI were deeply integrated, which can be harder to build as a plugin.

For example:

AI-first storytelling tool Tome is essentially rebuilding slide software from the ground up with AI at its core, rather than, say, building a plugin for Google Slides.

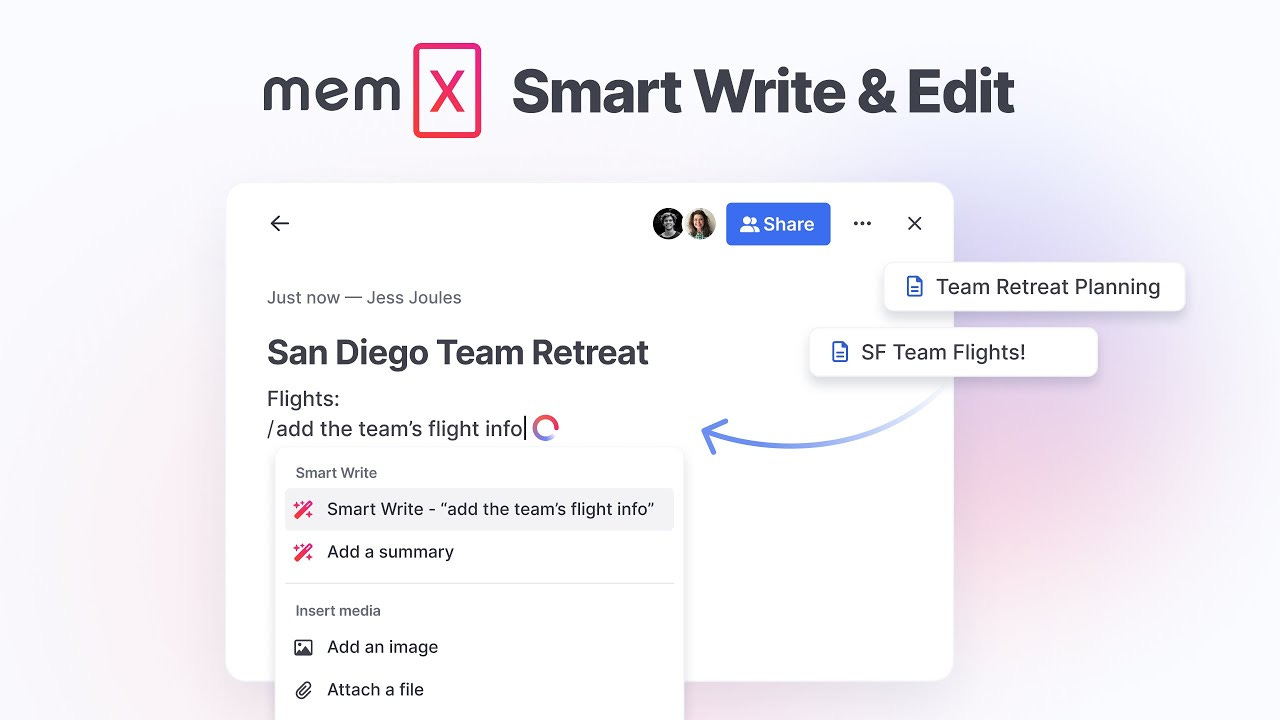

Companies like Mem and Lex are trying to reimagine knowledge base and text editing apps respectively from the ground up with AI at their core, rather than serving as a way to generate AI output that gets exported into another primary tool.

In these cases, the challenges are slightly different: they require changes in customers' workflows and a more ambitious and broader product build beyond just the AI features.

An important note is that this is more of a spectrum than a hard choice, and there isn't one right choice. For example, take the UI design space: Diagram is building a plugin within Figma, Galileo is building a standalone application that uses AI to generate an interface design that can be edited in Figma, and Uizard is building a standalone AI-powered design product that can essentially replace Figma for some designers.

Similarly, products like Jasper started off as standalone products that fit into the existing workflow. The process was roughly: generate the text in Jasper, make your edits, and then copy and paste it into wherever you need it, such as your content management system. Now, it's moving towards being more deeply integrated, but in a way that also changes the workflow: Jasper becomes where writing happens, the content then gets scheduled into the CMS, and over time it can suggest the right cadence based on the past, the goals, and so on.

Closing Thoughts

If I had to re-frame and summarize this in terms of what new startups should keep in mind while building, it would be:

Add AI value on top of the LLMs for the use case through prompt engineering and fine-tuning, but think about whether it will matter for the use case once models improve.

Incorporate private data/customer data in the model context to improve outputs.

Assume that incumbents in your space will at least adopt surface-level generative AI features and think about how you can go beyond those.

Think about the right insertion point for your product and try to go deep into workflows while minimizing disruptions but bringing out the full value of AI.
