This is Tanay’s Newsletter, a weekly newsletter about the business of the technology industry.

Hi friends,

This week, I’ll round up what the big tech companies are up to in generative AI, based on the discussions on their Q2 earnings calls over the past couple of weeks.

Unless otherwise noted, all quotes below are directly from the company’s CEO.

Amazon

Amazon views the LLM stack as having three layers and is investing heavily in all three: (i) compute for training and inference of LLMs, (ii) LLMs as a service, and (iii) applications built on top.

Compute

Amazon makes NVIDIA H100 GPUs available via its EC2 P5 instances, but has also been investing in its own chips, Trainium and Inferentia, which are now in their second versions and offer customers an appealing option from a price/performance perspective. Amazon hasn’t yet shared any stats on uptake.

LLMs as a service

The vast majority of companies don't want to train their own models, and that is exactly the problem Amazon Bedrock aims to solve by offering LLMs as a service. It supports models from Anthropic, Stability AI, AI21 Labs, and Cohere, as well as Amazon's own LLM, Titan. Customers using Bedrock include Bridgewater Associates, Coda, Lonely Planet, Omnicom, 3M, Ryanair, Showpad, and Travelers.

Most companies tell us that they don't want to consume that resource building themselves. Rather, they want access to those large language models, want to customize them with their own data without leaking their proprietary data into the general model, have all the security, privacy, and platform features in AWS work with this new enhanced model, and then have it all wrapped in a managed service.

Applications

Amazon intends to build many applications itself but recognizes that most will be built by other companies, ideally on AWS. So far, it has built Amazon CodeWhisperer, an AI-powered coding companion that it believes is one of the early compelling GenAI applications, and many more are likely to come:

Inside Amazon, every one of our teams is working on building generative AI applications that reinvent and enhance their customers' experience.

Microsoft

Satya Nadella said that one of Microsoft’s three key priorities is “investing to lead in the new AI platform shift by infusing AI across every layer of the tech stack”.

Microsoft touched on their AI investments across three main areas: Azure, Copilots and Bing.

Azure

Microsoft’s Azure OpenAI Service seems to have great momentum. More than 11,000 organizations across industries use the service, including IKEA, Volvo Group, and Zurich Insurance, as well as digital natives like Flipkart, Humane, Kahoot, Miro, and Typeface.

Microsoft is also investing in Azure OpenAI Studio to help organizations ground, fine-tune, evaluate, and deploy models, and do so responsibly.

So far, Microsoft believes that AI services are contributing roughly 1 percentage point of the 26% growth in Azure and other cloud services, and that they are helping drive more share and logo gains for the business.

I mean, if you think about Azure, we have grown Azure over the years coming from behind. And here we are as a strong No.2 in the lead when it comes to these new workloads. So, for example, we are seeing new logos, customers who may have used out of the cloud for most of what they do are, for the first time, sort of starting to use Azure for some of their new AI workloads. We also have even customers who've used multiple clouds who use for a class of sort of workloads also start new projects when it's transferred in data and AI, which they were using other So what I think you will see us is more share gains, more logo gains, reducing our CAC even. And so, those are the things of points of leverage.

Copilots

Microsoft has launched copilots in many of its products, including its Office suite, Power Virtual Agents, and Power BI, as well as a Security Copilot.

The first of its kind, GitHub Copilot, continues to be one of the strongest performers, with more than 27,000 organizations using GitHub Copilot for Business, up 2x Q/Q.

Microsoft 365 Copilot, which will be priced at $30/user/mo, isn’t generally available yet, but Microsoft underscored its excitement about the customer reaction and the level of interest in joining the paid preview.

Nearly 90% of GitHub Copilot sign-ups are self-service, indicating strong organic interest and pull-through. More than 27,000 organizations, up 2x quarter over quarter, have chosen GitHub Copilot for Business to increase the productivity of their developers, including Airbnb, Dell, and Scandinavian Airlines.

Bing and Search

Bing is the default search experience for OpenAI's ChatGPT. In addition, Bing’s own chat has been used for over 1 billion conversations.

Microsoft Edge has now taken share in the browser market for nine consecutive quarters, in large part because of its AI-powered features.

Microsoft also intends to launch Bing Chat Enterprise soon, which offers commercial data protection and allows enterprises to easily use this technology.

Meta

Meta believes that the “two technological waves that we're riding are AI in the near term and the metaverse over the longer term”.

On the AI front, they're investing across four main areas: infrastructure, recommendation systems, ad products, and consumer products.

Recommendation Systems

While this has always been an investment area for Meta, larger models are helping them improve their recommendations and see gains in time spent and engagement.

AI-recommended content from accounts you don't follow is now the fastest growing category of content on Facebook's feed. Since introducing these recommendations, they have driven a 7% increase in overall time spent on the platform.

Infrastructure

A few weeks ago, Meta made its Llama 2 model available open source for commercial use, partnering with Microsoft to do so. This follows its open-sourcing of PyTorch and of models such as Segment Anything, ImageBind, and DINO.

Given that they don’t have a cloud platform and are “playing a different game”, we can expect them to keep open-sourcing more of their work.

We've found that open sourcing our work allows the industry, including us, to benefit from innovations that come from everywhere. And these are often improvements in safety and security, since open source software is more scrutinized and more people can find and identify fixes for issues. The improvements also often come in the form of efficiency gains, which should hopefully allow us and others to run these models with less infrastructure investment going forward.

Ad Products

Meta is investing in leveraging AI to improve the experience and ROI for advertisers using their platform.

They’ve deployed Meta Lattice, a new model architecture that learns to predict an ad's performance across a variety of datasets and optimization goals.

They also have launched AI Sandbox, a testing playground for generative AI-powered tools which advertisers can use to create their ads.

Already, most of their advertisers are using at least one of their AI-driven ad products, such as Meta Advantage, their suite of automated ad products.

Consumer Products

So far, we’ve not seen much in the form of Generative AI in any of Meta’s core apps. But Zuckerberg hints that they’re coming soon and he’ll share more details later this year. It seems like it will take two forms initially:

Creative tools that make it easier and more fun to share content

Agents that act as assistants, coaches, or that can help you interact with businesses and creators, and more

We're also building a number of new products ourselves using Llama that will work across our services. I'm going to share more details later this year, but you can imagine lots of ways AI could help people connect and express themselves in our apps: creative tools that make it easier and more fun to share content, agents that act as assistants, coaches, or that can help you interact with businesses and creators, and more. These new products will improve everything that we do across both mobile apps and the metaverse -- helping people create worlds and the avatars and objects that inhabit them as well.

Alphabet

Sundar Pichai continued to call out how important AI is to Alphabet, noting that “this is our seventh year as an AI-first company”.

Alphabet talked about their investments in AI along three key areas: search, productivity and creativity, and cloud infrastructure.

Search

Google search is arguably the best business created in the history of capitalism, generating $150B+ in revenue per year.

GenAI is probably driving one of the biggest changes Google has made to Search in over a decade: the Search Generative Experience, which uses LLMs to answer user queries more naturally and directly.

It can better answer the queries people come to us with today, while also unlocking entirely new types of questions that Search can answer.

For example, we found that generative AI can connect the dots for people as they explore a topic or project, helping them weigh multiple factors and personal preferences before making a purchase or booking a trip.

Google launched it in beta in May and has been working on improving the latency and accuracy of the results. It is also working on new ad formats to let advertisers leverage these results.

We are testing and evolving placements and formats and giving advertisers tools to take advantage of generative AI.

The big question on everyone’s mind is when this rolls out fully in Search and what the impact will be. Sundar Pichai was tight-lipped on the exact timeline, but said they’ll continue to expand it to more users:

So I would say, we are ahead of where I thought we would be at this point in time, and the feedback has been very positive. We’ve just improved our efficiency pretty dramatically since the product launched. The latency has improved significantly. We are keeping a very high bar, but I would say we are ahead on all the metrics in terms of how we look at it internally, and so couldn’t be more pleased with it.

And so you will see us continue to bring it to more and more users, and over time this will just be how Search works. And so while we are taking deliberate steps, we are building the next major evolution in Search, and I’m pleased with how it’s going so far.

Productivity

Productivity is another big area of investment for Google.

Google announced Bard in March and has continued to expand access and accessibility and make it more personalized. Anecdotally, Bard has improved significantly since its launch and is now better than ChatGPT for some types of queries.

Duet AI, Google’s version of Copilot for its productivity suite, is now available to over 750K users and lets them collaborate with AI on coding, writing, and other tasks.

Cloud

Google also boasted about how their AI-optimised infra is allowing them to win new customers and grow their TAM.

On the training and compute side, more than 70% of GenAI unicorns use Google Cloud, including Cohere, Jasper, Typeface, and many more. Additionally, Google offers what it says is the widest choice of AI supercomputer options, with Google TPUs and advanced Nvidia GPUs.

Google now supports over 80 different models, including third-party and popular open-source models, in its Vertex, Search, and Conversational AI platforms.

They’re seeing strong growth so far — the number of customers leveraging the above AI platforms grew more than 15X from April to June.

Apple

Last but not least, let’s talk about Apple. Surprisingly (or not), Apple didn’t have much discussion about AI in their initial prepared remarks.

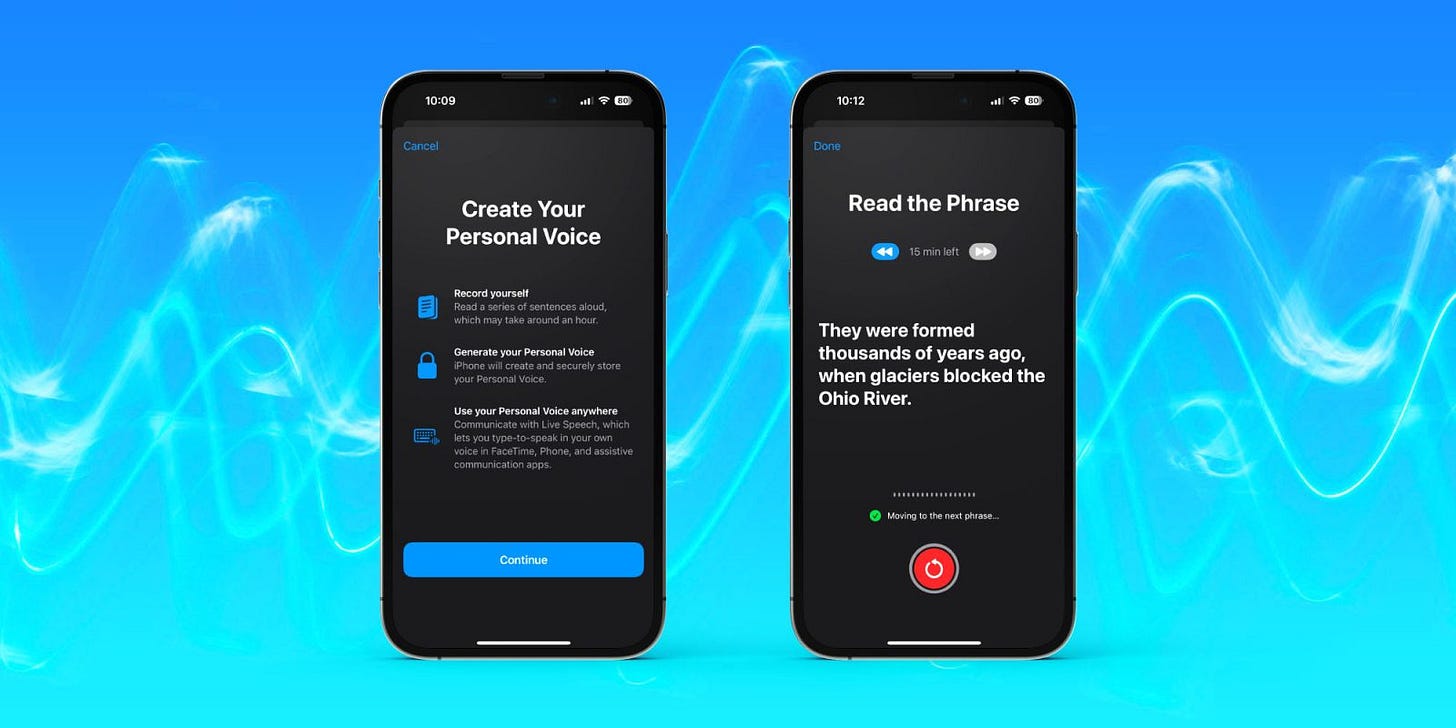

When asked about AI, Tim Cook said that AI and ML are core to their products and pointed to their WWDC announcements of AI features coming to iOS 17, such as Personal Voice and Live Voicemail. He also said that they like to announce things when they come to market, so we’ll have to wait a bit longer.

If you take a step back, we view AI and machine learning as core fundamental technologies that are integral to virtually every product that we build. And so if you think about WWDC in June, we announced some features that will be coming in iOS 17 this fall, like Personal Voice and Live Voicemail. Previously, we had announced lifesaving features like fall detection and crash detection and ECG. None of these features that I just mentioned and many, many more would be possible without AI and machine learning. And so it's absolutely critical to us.

And of course, we've been doing research across a wide range of AI technologies, including generative AI for years. We're going to continue investing and innovating and responsibly advancing our products with these technologies with the goal of enriching people's lives. And so that's what it's all about for us. And as you know, we tend to announce things as they come to market, and that's our MO, and I'd like to stick to that.

