Big Tech and Generative AI Q3 '24 Update

How Amazon, Apple and Google's Generative AI investments are going

This is a weekly newsletter about the business of the technology industry. To receive Tanay’s Newsletter in your inbox, subscribe here for free:

Hi friends,

This week, I’ll be rounding up what some of the big tech companies are up to in Generative AI, based on discussions from their Q3 earnings calls over the past couple of weeks. I’ll cover Amazon, Apple and Google today, and Meta and Microsoft next week.

Unless otherwise noted, all quotes below come directly from the respective company’s earnings call and are from the CEO.

Amazon

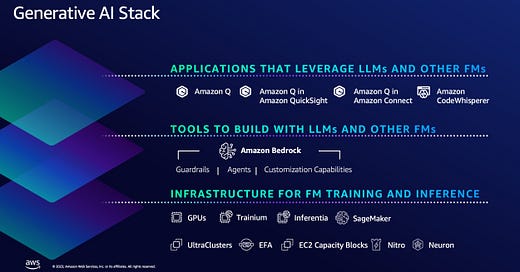

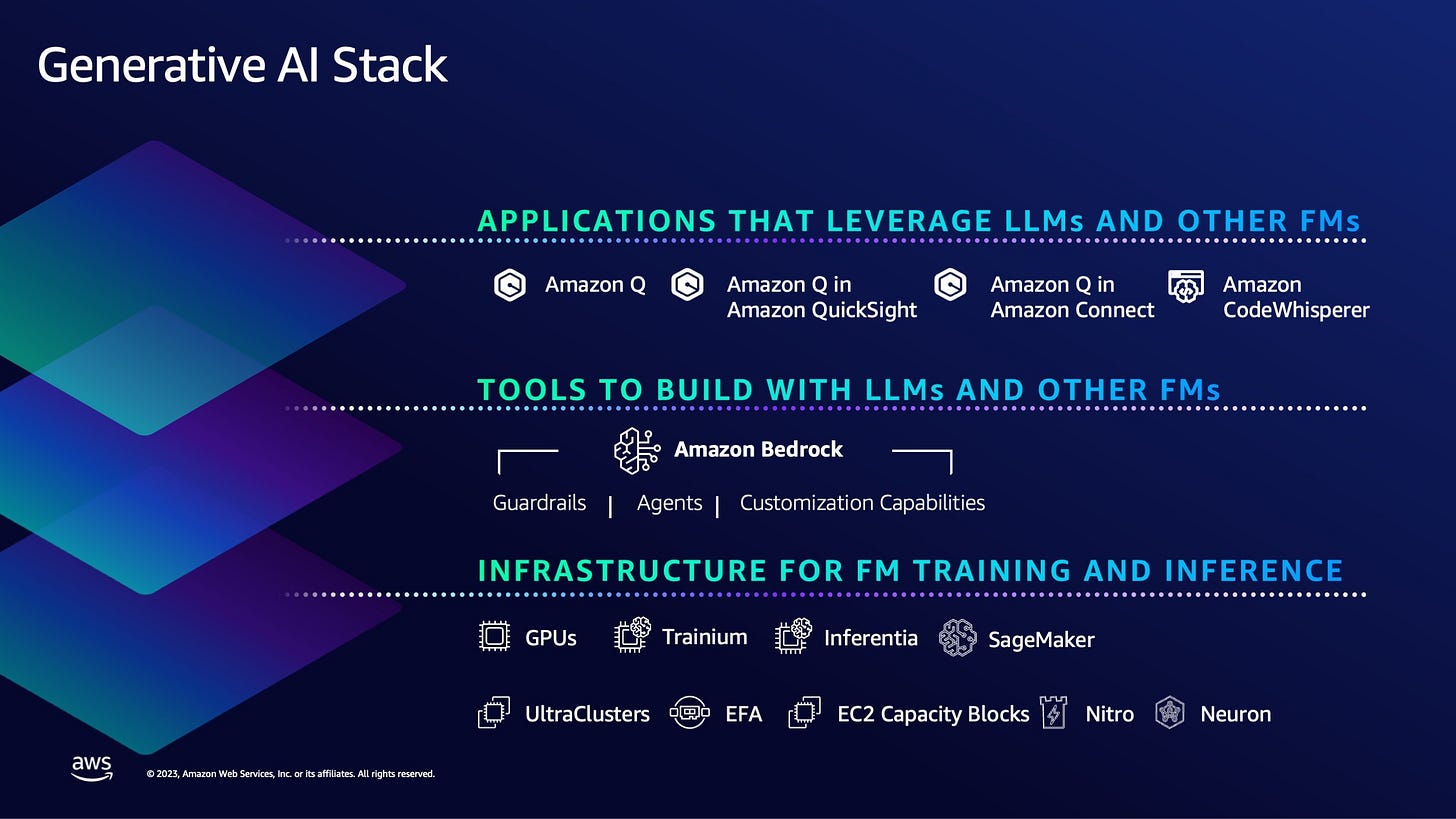

Amazon thinks of its AI offering as three macro layers of the stack: i) the foundational infrastructure layer for model builders, ii) the platform layer for developers who customize or use models and deploy applications, and iii) the application layer, where Amazon develops end-to-end AI applications and incorporates AI into its own products.

At an overall level, AWS’s AI business is executing well, both in product velocity and in early top-line revenue scale and growth.

“In the last 18 months, AWS has released nearly twice as many machine learning and gen AI features as the other leading cloud providers combined. AWS's AI business is a multibillion-dollar revenue run rate business that continues to grow at a triple-digit year-over-year percentage and is growing more than 3x faster at this stage of its evolution as AWS itself grew, and we felt like AWS grew pretty quickly”

At the same time, Amazon notes that given industry and market dynamics, margins on the AI side are lower than those of the rest of AWS.

“It's moving very quickly and the margins are lower than what they -- I think they will be over time. The same was true with AWS. If you looked at our margins around the time you were citing, in 2010, they were pretty different than they are now”

Infrastructure Layer

Amazon continues to work closely with NVIDIA and is the first major cloud provider to offer NVIDIA’s H200 GPUs. At the same time, it has invested in its own chips: Trainium for training and Inferentia for inference. The second version of Trainium, Trainium2, starts ramping up in the next few weeks, and Amazon notes significant demand for it.

“As customers approach higher scale in their implementations, they realize quickly that AI can get costly. It's why we've invested in our own custom silicon in Trainium for training and Inferentia for inference. The second version of Trainium, Trainium2 is starting to ramp up in the next few weeks and will be very compelling for customers on price performance. We're seeing significant interest in these chips, and we've gone back to our manufacturing partners multiple times to produce much more than we'd originally planned.”

Platform Layer

Amazon continues to invest in Bedrock, which allows developers to customize, fine-tune and leverage foundation models to build applications. It now boasts modules for evals, guardrails, RAG and agents, along with the broadest selection of foundation models, and it makes orchestrating multiple models within one application easy.

“Recently, we've added Anthropic's Claude 3.5 Sonnet model, Meta's Llama 3.2 models, Mistral's Large 2 models and multiple stability AI models. We also continue to see teams use multiple model types from different model providers and multiple model sizes in the same application. There's mucking orchestration required to make this happen. And part of what makes Bedrock so appealing to customers and why it has so much traction is that Bedrock makes this much easier.”

Application Layer

Amazon cites its Amazon Q software development assistant as having the highest code acceptance rates in the industry, and reshared the anecdote of Q Transform, a code modernization tool that helped Amazon migrate over 30,000 Java applications.

“Q Transform saved Amazon's teams $260 million and 4,500 developer years in migrating over 30,000 applications to new versions of the Java JDK.”

Amazon also continues to invest in using GenAI across all aspects of its business, noting hundreds of apps either launched or in development.

On the retail side, Amazon has launched improvements to Rufus, its shopping assistant for consumers, and for sellers it has launched Project Amelia, an AI system that offers tailored business insights to help them grow.

Lastly, Amazon expects AI to have a big impact through improved robotics in its fulfillment network, while acknowledging that it’s very early there.

“We really do believe that AI is going to be a big piece of what we do in our robotics network. We had a number of efforts going on there. We just hired a number of people from an incredibly strong robotics AI organization. And I think that will be a very central part of what we do moving forward, too.”

Alphabet

While many in tech point to the increasing pressure Google faces in its search business from upstarts such as Perplexity, Google isn’t really feeling it on its top line yet, with search revenue remaining strong.

AI in Search

Google has started integrating AI Overviews into Google Search in a meaningful way and has now rolled them out to a large audience. The feature is also changing the way people use Google, since they can now ask longer and more complex questions.

“Just this week, AI overview started rolling out to more than 100 new countries and territories. It will now reach more than 1 billion users on a monthly basis. We are seeing strong engagement, which is increasing overall search usage and user satisfaction. People are asking longer and more complex questions and exploring a wide range of websites. What's particularly exciting is that this growth actually increases over time as people learn that Google can answer more of their questions”

Google didn’t disclose any meaningful financial metrics for the product, but did share that it has reduced the cost of serving AI Overviews queries by more than 90%:

“In 18 months, we reduced cost by more than 90% for these queries through hardware, engineering and technical breakthroughs while doubling the size of our custom Gemini model”

Gemini Everywhere

Google is leveraging Gemini as the foundation for AI features across its products, and Gemini continues to see strong pull both internally and externally.

On the internal front, all seven of Google’s products and platforms with more than 2B monthly users now use Gemini models.

“Today, all 7 of our products and platforms with more than 2 billion monthly users use Gemini models, that includes the latest product to surpass the 2 billion user milestone Google Maps”

On the developer front, Google is seeing continued growth in its enterprise AI platform, Vertex AI, and in Gemini adoption, with Gemini API calls growing nearly 14x over a six-month period.

“Gemini API calls have grown nearly 14x in a 6-month period. When Snap was looking to power more innovative experiences within their my AI chatbot, they chose Gemini's strong multimodal capabilities”

And the next generation of Gemini is in the works.

“We've had 2 generations of Gemini model. We are working on the third generation, which is progressing well. And teams internally are now set up much better to consume the underlying model innovation and translate that into innovation within their products”

Infrastructure Investments

Google continues to invest in the infrastructure that powers AI across data centers, chips, fiber and energy, with quarterly capex of about $13B, the bulk of which is technical infrastructure. Of that technical infrastructure spend, 60% goes to servers (GPUs/TPUs) and 40% to data centers and networking equipment, and Google expects to keep investing at least at this pace.

“We will be investing in Q4 at approximately the same level of what we've invested in Q3, approximately $13 billion. And as we think into 2025, we do see an increase coming in 2025.”

On the chip side, Google is now on its sixth-generation TPU, Trillium, and continues to drive performance gains with it, while also offering other options such as NVIDIA GPUs to its cloud customers. When asked about the efficiency advantages of TPUs, Sundar pointed to the pricing Google has been able to deliver on its Flash models:

“If you look at the flash pricing, we've been able to deliver externally, I think and how much more attractive it is compared to other models of that capability. I think probably that gives a good sense of the efficiencies we can generate from our architecture.”

Internal Use for Coding

Lastly, one other comment from Sundar that made waves on the call was about how effective AI has been internally for code generation: more than a quarter of all new code at Google is now generated by AI. My caveat is that code suggestions similar to the typeahead autocomplete of the past are likely also being counted :)

“We're also using AI internally to improve our coding processes, which is boosting productivity and efficiency. Today, more than 1/4 of all new code at Google is generated by AI, then reviewed and accepted by engineers. This helps our engineers do more and move faster. I'm energized by our progress and the opportunities ahead, and we continue to be laser-focused on building great products.”

Apple

Many argue Apple has been late to AI and may not have done enough yet; it often barely discussed AI on previous earnings calls. AI came up more on this call, particularly given the recent launch of Apple Intelligence, which seems to be the cornerstone of Apple’s AI strategy.

The main goal of Apple’s AI features is to increase upgrade rates and shorten upgrade cycles for the iPhone and other devices.

Apple Intelligence

Apple’s approach, a personal intelligence system built with privacy in mind, is the key aspect of its AI play, and Apple Intelligence finally rolled out last week.

“Apple Intelligence marks the beginning of a new chapter for Apple Innovation and redefines privacy and AI by extending our groundbreaking approach to privacy into the cloud with private cloud compute. Earlier this week, we made the first set of Apple Intelligence features available in U.S. English for iPhone, iPad and Mac users with system-wide writing tools that help you refine your writing, a more natural and conversational Siri, a more intelligent Photos app, including the ability to create movies simply by typing a description, and new ways to prioritize and stay in the moment with notification summaries and priority messages.”

Early signs point to a lot of interest in Apple Intelligence, at least as gauged by how quickly users are installing the iOS update that gives them access to it. Apple also believes it’s a compelling reason to upgrade devices, though data on that is currently limited.

“If you just look at the first 3 days, which is all we have obviously from Monday, the 18.1 adoption is twice as fast as the 17.1 adoption was in the year ago quarter. And so there's definitely interest out there for Apple Intelligence.”

Tim Cook notes that there’s a lot more to come with Apple Intelligence, including visual intelligence and the ChatGPT integration:

“Apple Intelligence, which marks the start of a new chapter for our products. This is just the beginning of what we believe generative AI can do, and I couldn't be more excited for what's to come.”

AI investments

Reading between the lines of the questions on the call, a few analysts seemed surprised at how low Apple’s AI investment has been on a capex and R&D basis, and were trying to understand whether a big jump in spend is likely. Apple skirted the question but basically signaled probably not.

Relative to its peers, Apple hasn’t materially ramped up its capex (~$10B/yr), and all indications on the call were that while it will continue to invest in AI, it doesn’t expect a large increase next year.

“But obviously, we are rolling out these features, Apple Intelligence features already now. And so we are making all the capacity that is needed available for these features… in fiscal '25, we will continue to make all the investments that are necessary, and of course, the investments in AI-related CapEx will be made.”

Similarly, Apple’s R&D spend is roughly 7-8% of revenue, and when asked whether this may go up, Apple responded that its AI focus has increased a lot, but that it has partly funded this by reallocating existing engineering resources towards AI.

“As we move through the course of fiscal '24, we've also reallocated some of the existing resources to this new technology, to AI. And so the level of intensity that we're putting into AI has increased a lot, and you maybe don't see the full extent of it because we've also had some internal reallocation of the base of engineering resources that we have within the company.”