This is a weekly newsletter about the business of the technology industry. To receive Tanay’s Newsletter in your inbox, subscribe here for free:

Hi friends,

This week, I’ll be rounding up what all the big tech companies are up to in Generative AI, based on some of the discussions in their earnings calls as well as various developer events and conferences they’ve held. A lot has changed since the prior roundup I did at the end of 2022.

Microsoft

Satya Nadella emphasized Microsoft’s leadership position as the industry enters this new AI era, with Microsoft continuing to make progress on AI products across every layer of the stack.

With our leadership position as we begin this AI era, we remain focused on strategically managing the company to deliver differentiated customer value as well as long-term financial growth and profitability. As with any significant platform shift, it starts with innovation. And we are excited about the early feedback and demand signals for the AI capabilities we have announced to date.

Infrastructure

Training: Azure continued to take share, and Microsoft’s AI infrastructure is being used by OpenAI, NVIDIA, Adept, Inflection and others to train models.

Azure x OpenAI: Over 2,500 customers are using Azure OpenAI Service, which brings GPT-4 to Azure. The customer count was up 10x quarter over quarter, and the service is likely already close to a billion-dollar run rate. Customers include Coursera, Grammarly, Shell and Mercedes-Benz.

Developers

GitHub Copilot: Over 10,000 organisations have signed up for Copilot for Business, including Coca-Cola, GM and others.

AI in Power Platform: Microsoft is bringing next-generation AI to Power Platform so anyone can automate workflows, create apps or web pages, build virtual agents and analyze data using only natural language.

Applications

Copilot in Office: Microsoft has been encouraged by early feedback on Microsoft 365 Copilot, which combines AI with a company’s own data in the context of each Office application, and expects to roll it out in the coming months.

Bing / Edge: Powered by Bing Chat and other AI features, Bing and Edge continued to gain share in the search and browser markets. Bing now has over 100M users.

AI in LinkedIn: Microsoft has begun adding AI-powered features to LinkedIn, including writing suggestions for member profiles and job descriptions, as well as collaborative articles.

Meta

Meta’s AI work falls into two main areas, underpinned by its open approach:

Recommendations/ranking work that powers their core products

The new generative models that are enabling new classes of products and experiences

Recommendations and Ranking

This is work Meta has been doing for a while, but better infrastructure and models continue to drive improvements in its core products:

Organic Engagement: Since the launch of Reels, AI recommendations have driven a more than 24% increase in time spent on Instagram, and as much as 40% of the content people see is AI-recommended.

Monetisation: Thanks to better ads ranking, Reels monetisation efficiency is up 30% on Instagram and 40% on Facebook quarter over quarter.

New Experiences

While Meta didn’t highlight specific upcoming features or launches, Zuckerberg sketched out some of the ideas the teams are exploring, broadly under the overarching themes of connection and expression:

AI agents: Zuckerberg believes there’s an opportunity to introduce AI agents to billions of people in ways that will be useful and meaningful. Meta is exploring chat experiences in WhatsApp and Messenger for users, businesses and creators, and sees use cases including AI agents for business messaging and customer support.

Visual Creation: Meta is exploring visual creation tools for Facebook and Instagram posts and for ads, and eventually for video and multimodal experiences as well. Over time, this could extend to the Metaverse too, helping people create avatars, objects, worlds, etc. As a preview, Meta has already announced some of these tools for advertisers, enabling background generation, image outcropping and more.

Open Approach

Zuckerberg wants to help create an open ecosystem around AI models and tools. This isn’t just a philanthropic gesture: he believes that if the industry standardizes on these open tools and improves them further, it can help keep Meta’s products at the leading edge.

Meta has already open-sourced models such as Segment Anything and DINOv2, and released LLaMA to researchers. It expects to do more on the infrastructure front, noting that it is playing a different game than AWS, GCP and Azure:

And the reason why I think why we do this is that unlike some of the other companies in the space, we're not selling a cloud computing service where we try to keep the different software infrastructure that we're building proprietary. For us, it's way better if the industry standardizes on the basic tools that we're using and therefore we can benefit from the improvements that others make and others’ use of those tools can, in some cases like Open Compute, drive down the costs of those things which make our business more efficient too. So I think to some degree we're just playing a different game on the infrastructure than companies like Google or Microsoft or Amazon, and that creates different incentives for us. So overall, I think that that's going to lead us to do more work in terms of open sourcing, some of the lower level models and tools.

Amazon

Amazon shared a number of updates across their AWS products and applications around AI.

Infrastructure and Developers

About a month ago, Amazon announced a new set of tools for building with Generative AI on AWS. These include:

Chips: General availability of the next generation of AWS Trainium and AWS Inferentia, Amazon’s chips for ML training and inference respectively. Amazon counts AI startups like AI21 Labs, Anthropic, Cohere, Grammarly, Hugging Face, Runway and Stability AI as customers.

Foundation models as a service: Through Amazon Bedrock, Amazon is making foundation models from AI21 Labs, Anthropic, Stability AI and its own Titan family available via API, and lets users fine-tune them with their own data in S3.

CodeWhisperer: An AI coding assistant that is free for individual developers and competes with GitHub Copilot.

Applications

Jassy mentioned that Amazon will build on top of large language models in every single one of its businesses to reinvent the customer experience: advertising, devices, stores, etc. One area he was particularly excited about was Alexa, which should be improving soon:

But we have a vision, which we have conviction about that we want to build the world's best personal assistant.

And to do that, it's difficult. It's across a lot of domains and it's a very broad surface area. However, if you think about the advent of large language models and generative AI, it makes the underlying models that much more effective such that I think it really accelerates the possibility of building the world's best personal assistant. And I think we start from a pretty good spot with Alexa because we have a couple of hundred million endpoints being used across entertainment and shopping and smart home and information and a lot of involvement from third-party ecosystem partners.

And we've had a large language model underneath it, but we're building one that's much larger and much more generalized and capable. And I think that's going to really rapidly accelerate our vision of becoming the world's best personal assistant. I think there's a significant business model underneath it.

Google

At Google’s I/O conference last week, Sundar Pichai positioned Google as an AI-first company and said it plans to reimagine all of its core products with AI. The event brought a slew of announcements, a few of which are covered below.

The upshot: Google is taking this very seriously. It may have been asleep at the wheel, but it is working hard to catch up and might be very close.

Infrastructure

A number of companies already use Google Cloud for AI, including:

AI21 Labs, Replit and Midjourney use Google Cloud to train foundation models.

Canva, Instacart and others are using Google’s cloud AI APIs to develop AI features.

Google made a number of announcements at I/O aimed at attracting more developers to its offerings. A few highlights include:

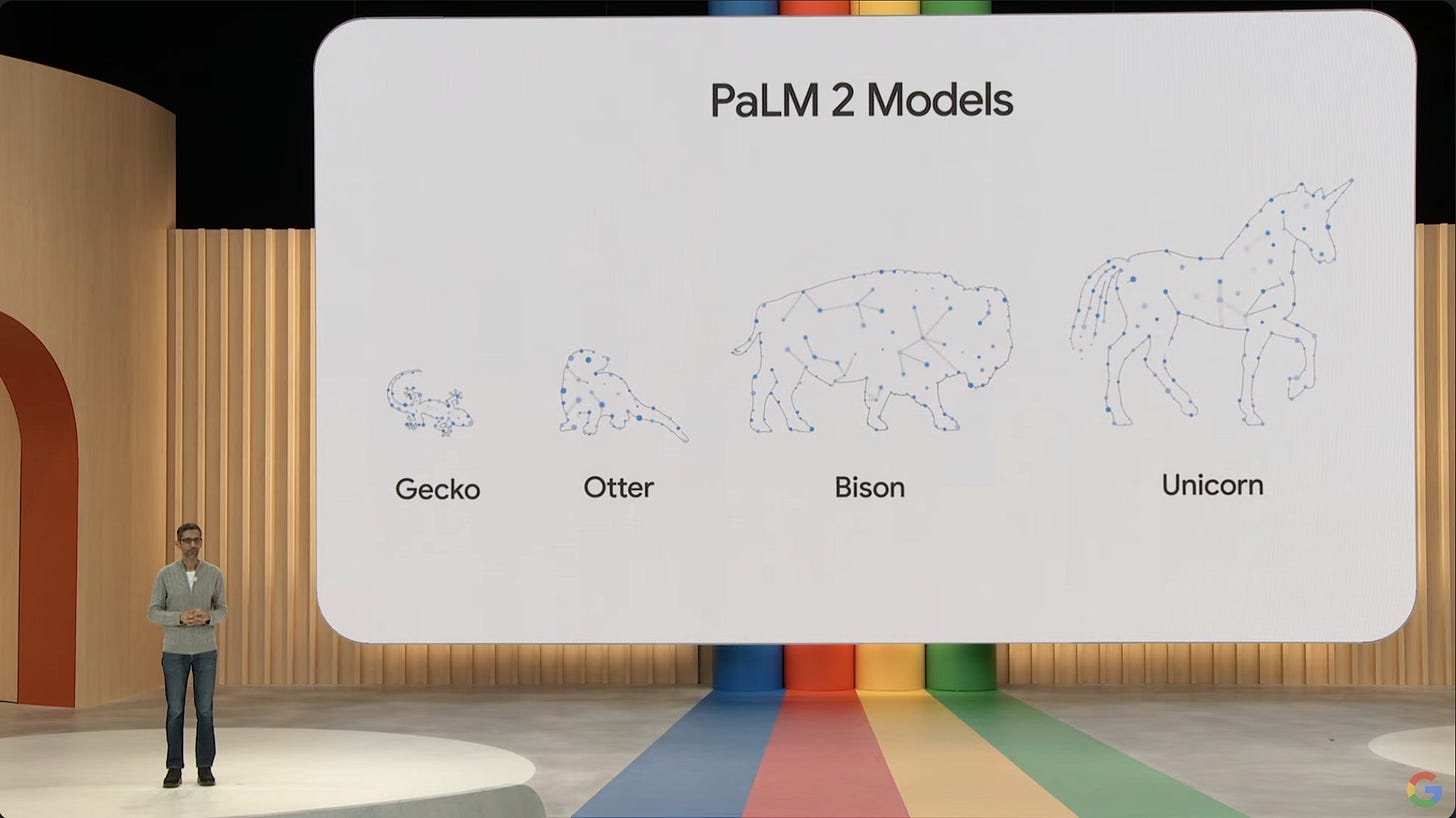

Next-gen LLM: Google announced PaLM 2, its next-generation LLM, which now powers a number of the AI features in its applications and will be made available to developers over time. It comes in four sizes (Gecko, Otter, Bison and Unicorn), each with different latency and cost tradeoffs. Gecko, the smallest, can run locally on a mobile phone.

More Foundation models: Google also announced more foundation models and APIs it is making available to developers:

Codey, a text-to-code foundation model

Imagen, a text-to-image foundation model

Chirp, a speech-to-text foundation model

Embedding API for text and images

Google also teased Gemini, its next-generation foundation model, which is still in training but is being built to be multimodal and to enable future innovations like memory and planning.

Search and Chat

AI answers in search: In what might be the biggest change to the Google search UX since its launch, Google is experimenting with generative AI results in search, with the ability to dig deeper and ask follow-up questions. This could have huge implications for how companies get organic traffic from Google in the future.

Bard launches officially: Google improved the underlying model behind Bard and made it available to everyone, removing the waitlist. Bard now supports English, Japanese and Korean, with support for 40 languages planned soon, and it is able to browse the web. Google is also adding “tools”, the equivalent of Plugins in ChatGPT, and support for including images in the chat.

Applications

Powered by its latest PaLM 2 model, Google is putting AI into many of its consumer and enterprise products, including:

Duet AI for Workspace: A Copilot-like product coming to Docs, Sheets, Slides and more, which will help users collaborate with AI to create better documents and presentations. It is expected to launch in the coming months.

Help me write in Gmail: Google is also bringing generative AI to email, letting users auto-generate entire emails that draw on relevant context from previous messages in the thread.

Apple

While Apple certainly has plans in the works, it has thus far been quite tight-lipped, other than a few small announcements and leaks, such as a reported paid AI health coaching service.

During Apple’s most recent earnings call, AI came up only in response to an analyst question about its AI plans, and Tim Cook said little beyond that Apple views AI as important and will continue weaving it into its products:

As you know, we don’t comment on product roadmaps. I do think it’s very important to be deliberate and thoughtful in how you approach these things, and there’s a number of issues that need to be sorted, as is being talked about in a number of different places. But the potential is certainly very interesting and we’ve obviously made enormous progress integrating AI and machine learning throughout our ecosystem, and we’ve weaved it into products and features for many years, as you probably know. You can see that in things like fall detection and crash detection and ECG. These things are not only great features, they’re saving people’s lives out there. And so it’s absolutely remarkable. And so we view AI as huge, and we’ll continue weaving it in our products on a very thoughtful basis.

I’ll leave the speculation for another post, but Apple surely has iOS and Siri improvements in the works leveraging the power of LLMs, despite not sharing much yet.

Thanks for reading! If you liked this post, give it a heart up above to help others find it or share it with your friends.

If you have any comments or thoughts, feel free to tweet at me.

If you’re not a subscriber, you can subscribe for free below. I write about things related to technology and business once a week on Mondays.